The serverless landscape has split into two distinct camps: traditional serverless (AWS Lambda, Google Cloud Functions) and edge functions (Cloudflare Workers, Vercel Edge, Deno Deploy). Both promise "just deploy code, we handle infrastructure" — but they make very different tradeoffs.

Choosing wrong means either overpaying for capabilities you do not need or hitting limitations that require rewriting your application.

Edge functions and traditional serverless serve different use cases

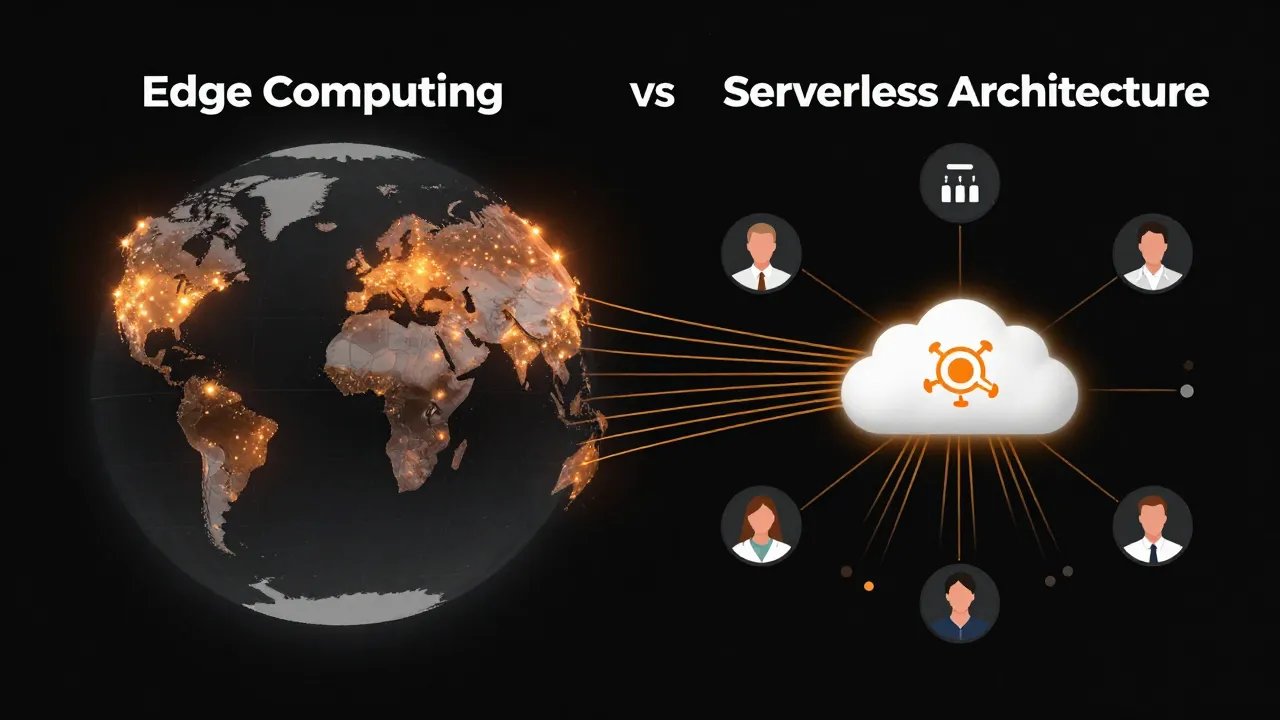

The Core Difference

Traditional Serverless runs in centralized cloud regions. Your function executes in us-east-1 or eu-west-1, and users worldwide connect to that region.

Edge Functions run at the network edge, in anywhere from 35 to 300+ locations worldwide depending on the platform. Your function executes in the datacenter closest to each user.

Request Flow: Traditional Serverless

User (Tokyo) → CDN → us-east-1 Lambda → Database (us-east-1)

Latency: 150-300ms round trip

Request Flow: Edge Function

User (Tokyo) → Tokyo Edge → (optional) Origin

Latency: 10-50ms for edge-only, 150-300ms if origin needed

Platform Comparison

| Feature | AWS Lambda | Cloudflare Workers | Vercel Edge | Deno Deploy |
|---|---|---|---|---|
| Cold start | 100-3000ms | 0-5ms | 0-10ms | 0-10ms |
| Execution limit | 15 min | 30s (paid: unlimited) | 30s | 50ms (free), higher paid |
| Memory | Up to 10GB | 128MB | 128MB | 512MB |
| Languages | Many | JS/TS/WASM | JS/TS | JS/TS |
| Database access | Any (VPC) | Limited (D1, KV, external) | Limited | Limited |
| Regions | 20+ | 300+ | 100+ | 35+ |
| Pricing model | Per-request + duration | Per-request | Per-request | Per-request |

When to Use Edge Functions

Edge functions excel when:

1. Latency Is Critical

For user-facing requests where every millisecond matters:

    // Vercel Edge Function - personalized content
    export const config = { runtime: 'edge' }

    // getLocalizedContent is an application-defined helper (not shown)
    export default function handler(request: Request) {
      // Vercel sets this header to the visitor's two-letter country code
      const country = request.headers.get('x-vercel-ip-country')
      const content = getLocalizedContent(country)
      return new Response(content)
    }

Use cases:

- A/B testing and feature flags

- Personalization based on location

- Authentication checks

- Request routing
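As an illustration of the A/B testing use case, here is a minimal sketch of deterministic bucketing, which lets an edge function pick a variant without a database lookup. The function names, the FNV-1a-style hash, and the idea of reading a stable `userId` from a cookie are assumptions made for this example, not part of any platform's API.

```typescript
type Variant = "control" | "treatment";

// FNV-1a-style 32-bit hash over the string's UTF-16 code units.
// Chosen here only because it is small and dependency-free.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

// Assigns a variant from a stable identifier (hypothetical: a user or
// session ID). `treatmentPercent` is the share of traffic, 0 to 100,
// that should see the treatment.
function assignVariant(
  experiment: string,
  userId: string,
  treatmentPercent: number
): Variant {
  const bucket = fnv1a(`${experiment}:${userId}`) % 100; // 0..99
  return bucket < treatmentPercent ? "treatment" : "control";
}
```

Because the bucket depends only on the experiment name and the identifier, the same user gets the same variant at every edge location, and including the experiment name keeps assignments independent across experiments.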

2. You Need Global Distribution

If your users are worldwide and latency consistency matters:

| User location | Lambda (us-east-1) | Edge Function |
|---|---|---|
| New York | 20ms | 15ms |
| London | 100ms | 15ms |
| Tokyo | 200ms | 15ms |
| Sydney | 250ms | 20ms |

3. Simple Compute, No Database

Edge functions work best for stateless operations:

- URL rewriting and redirects

- Header manipulation

- Request/response transformation

- Rate limiting

- Bot detection
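To make the "stateless" point concrete, the following sketch resolves redirects from a static rule list, so each request can be answered without any stored state. The `RedirectRule` shape and the `resolveRedirect` name are hypothetical and chosen for illustration.

```typescript
// A redirect rule maps an exact path, or everything under a prefix,
// to a new location.
interface RedirectRule {
  from: string;      // path to match, e.g. "/old-blog"
  to: string;        // replacement path
  prefix?: boolean;  // when true, also match paths below `from`
  status: 301 | 302;
}

interface Redirect {
  location: string;
  status: 301 | 302;
}

// Returns the redirect for a path, or null when the request should
// pass through unchanged. The first matching rule wins.
function resolveRedirect(pathname: string, rules: RedirectRule[]): Redirect | null {
  for (const rule of rules) {
    if (!rule.prefix && pathname === rule.from) {
      return { location: rule.to, status: rule.status };
    }
    if (rule.prefix && (pathname === rule.from || pathname.startsWith(rule.from + "/"))) {
      // Keep the remainder of the path: /docs/v1/intro -> /docs/v2/intro
      return { location: rule.to + pathname.slice(rule.from.length), status: rule.status };
    }
  }
  return null;
}
```

In an edge handler, a non-null result would typically become a response with that status and a `Location` header, while null means the request continues to the cache or origin.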

4. Cost Optimization at Scale

Edge functions are often cheaper for high-volume, low-compute workloads:

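    Cost Comparison: 100M requests/month, 50ms average
    ├── AWS Lambda: ~$200 + data transfer
    ├── Cloudflare Workers: ~$50 (Paid plan)
    └── Vercel Edge: ~$100 (Pro plan)

These are rough figures. The Lambda number in particular depends heavily on the memory you configure.

When to Use Traditional Serverless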

Traditional serverless wins when:

1. You Need Significant Compute

Memory-intensive or CPU-intensive workloads:

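    # AWS Lambda - image processing
    import boto3
    from PIL import Image

    # download_from_s3, heavy_processing and upload_to_s3 are application-defined
    # helpers (not shown); the S3 helpers would wrap a boto3 client.
    def handler(event, context):
        # Process 50MB image - needs memory
        image = Image.open(download_from_s3(event['key']))
        processed = heavy_processing(image)
        upload_to_s3(processed)
        return {'status': 'complete'}

Lambda offers up to 10GB RAM and 6 vCPUs. The edge platforms compared above cap at 128MB to 512MB.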

2. Long-Running Operations

Operations that take more than 30 seconds:

- Video transcoding

- Large file processing

- Batch operations

- ML inference

Lambda supports up to 15 minutes. Edge functions time out at 30 seconds (or less).

3. Database Connections

Traditional serverless integrates better with databases:

    // Lambda with RDS connection
    const { Pool } = require('pg')

    // Created outside the handler so warm invocations reuse the pool
    const pool = new Pool({ connectionString: process.env.DATABASE_URL })

    exports.handler = async (event) => {
      const result = await pool.query('SELECT * FROM users WHERE id = $1', [event.id])
      return result.rows[0]
    }

Edge functions have limited database options: mainly HTTP-based databases or edge-specific solutions like Cloudflare D1.
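For contrast with the pooled connection above, this is roughly what HTTP-based database access looks like: one stateless request per query. It is a sketch only. The endpoint URL, the `{ sql, params }` payload, and the `{ rows }` response shape are invented for illustration and do not describe any specific provider's API. The fetch function is injected so the example does not depend on a particular runtime.

```typescript
// Minimal shapes so the sketch does not depend on platform type definitions.
interface HttpResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<HttpResponse>;

// Sends one parameterized query per HTTP request. There is no connection
// pool: each call is an independent round trip to the database service.
async function queryOverHttp(
  fetchFn: FetchLike,
  endpoint: string, // hypothetical, e.g. "https://db.example.com/query"
  token: string,
  sql: string,
  params: unknown[]
): Promise<unknown[]> {
  const response = await fetchFn(endpoint, {
    method: "POST",
    headers: {
      "content-type": "application/json",
      authorization: `Bearer ${token}`,
    },
    body: JSON.stringify({ sql, params }), // hypothetical payload shape
  });
  if (!response.ok) {
    throw new Error(`Query failed with HTTP ${response.status}`);
  }
  const data = (await response.json()) as { rows?: unknown[] };
  return data.rows ?? [];
}
```

On a real platform you would likely pass the runtime's global `fetch` as `fetchFn`. The tradeoff is that every query pays a full HTTP round trip, which is why edge code tends to keep database calls few and simple.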

4. Complex Dependencies

If you need native modules, large libraries, or custom runtimes:

- Scientific computing (NumPy, SciPy)

- Machine learning (TensorFlow, PyTorch)

- Image/video processing (FFmpeg, ImageMagick)

Edge functions have strict bundle size limits (1-5MB typically).

The Hybrid Pattern

Many production applications combine both:

    Hybrid Architecture
    ├── Edge Layer (Cloudflare Workers / Vercel Edge)
    │   ├── Authentication verification
    │   ├── Rate limiting
    │   ├── Request routing
    │   ├── Static content personalization
    │   └── Cache management
    │
    ├── API Layer (Lambda / Cloud Functions)
    │   ├── Business logic
    │   ├── Database operations
    │   ├── Third-party integrations
    │   └── Heavy computation
    │
    └── Database Layer
        ├── Primary database (RDS, Cloud SQL)
        ├── Cache (Redis, Memcached)
        └── Edge cache (KV, D1)

The edge handles initial request processing. Traditional serverless handles complex operations.
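One way to picture this split is as a routing decision made at the edge before anything reaches the API layer. The sketch below is illustrative only: the `/api/` prefix, the 60-requests-per-minute threshold, and the `IncomingRequest` shape are assumptions, not part of any platform's API.

```typescript
type RouteDecision =
  | { action: "reject"; status: 401 | 429 }
  | { action: "serve-at-edge" }
  | { action: "forward-to-origin" };

interface IncomingRequest {
  path: string;
  hasValidToken: boolean;     // result of an edge-side token check
  requestsThisMinute: number; // counter kept by a hypothetical edge rate limiter
}

const RATE_LIMIT_PER_MINUTE = 60;  // illustrative threshold
const ORIGIN_PREFIXES = ["/api/"]; // paths that need business logic or a database

function decideRoute(req: IncomingRequest): RouteDecision {
  // Cheap checks run first, so abusive traffic never reaches the origin.
  if (req.requestsThisMinute > RATE_LIMIT_PER_MINUTE) {
    return { action: "reject", status: 429 };
  }
  const needsOrigin = ORIGIN_PREFIXES.some((prefix) => req.path.startsWith(prefix));
  if (needsOrigin && !req.hasValidToken) {
    return { action: "reject", status: 401 };
  }
  // Everything else is either answered locally or handed to the API layer.
  return needsOrigin ? { action: "forward-to-origin" } : { action: "serve-at-edge" };
}
```

Under these assumptions, only authenticated, rate-limited API calls pay the round trip to the regional backend. Pages that need no business logic are answered from the edge.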

Platform Deep Dives

Cloudflare Workers

Strengths:

- Largest edge network (300+ locations)

- Near-zero cold starts (V8 isolates)

- Integrated ecosystem (KV, D1, R2, Queues)

- Best pricing at scale

Limitations:

- 128MB memory limit

- JavaScript/TypeScript/WASM only

- Limited Node.js API compatibility

Best for: High-traffic sites, global APIs, real-time applications

Vercel Edge Functions

Strengths:

- Seamless Next.js integration

- Good developer experience

- Automatic edge/serverless routing

Limitations:

- Tied to Vercel platform

- Smaller edge network than Cloudflare

- Higher pricing than Cloudflare

Best for: Next.js applications, frontend-focused teams

Deno Deploy

Strengths:

- Native TypeScript support

- Modern runtime (Deno)

- Good Node.js compatibility

Limitations:

- Smaller network (35 regions)

- Less mature ecosystem

- Free tier limitations

Best for: Deno projects, TypeScript-first teams

AWS Lambda

Strengths:

- Most mature platform

- Broadest language support

- Deep AWS integration

- Highest resource limits

Limitations:

- Cold starts (mitigated with provisioned concurrency)

- Regional deployment requires more work

- Complex pricing

Best for: Complex backends, AWS-centric architectures, heavy compute
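Much of the pricing complexity comes from the bill having two parts: a per-request charge and a duration charge that scales with configured memory. The sketch below estimates both. The rates are assumptions based on published on-demand x86 pricing for us-east-1 and may be out of date. The estimate ignores the free tier, data transfer, and provisioned concurrency.

```typescript
interface LambdaPricing {
  perMillionRequests: number; // USD per 1M invocations
  perGbSecond: number;        // USD per GB-second of duration
}

// Assumed rates; check the current AWS price list before relying on them.
const ASSUMED_PRICING: LambdaPricing = {
  perMillionRequests: 0.2,
  perGbSecond: 0.0000166667,
};

// Estimates the monthly compute bill from request volume, average
// duration, and configured memory.
function estimateLambdaMonthlyCost(
  requests: number,
  avgDurationMs: number,
  memoryMb: number,
  pricing: LambdaPricing = ASSUMED_PRICING
): number {
  const requestCost = (requests / 1e6) * pricing.perMillionRequests;
  // Duration is billed in GB-seconds: seconds run times memory in GB.
  const gbSeconds = requests * (avgDurationMs / 1000) * (memoryMb / 1024);
  return requestCost + gbSeconds * pricing.perGbSecond;
}
```

With these assumed rates, 100M requests at 50ms come to roughly $30 at 128MB and roughly $187 at 2048MB, which shows how strongly the memory setting drives the total.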

Decision Framework

Ask these questions:

1. What is your latency requirement?

- Under 50ms globally → Edge functions

- Under 100ms regionally → Either

- Latency tolerant → Traditional serverless

2. How much compute do you need?

- Under 128MB RAM, under 30s → Edge functions

- More → Traditional serverless

3. Do you need database access?

- Simple KV or document store → Edge (with caveats)

- Relational or complex queries → Traditional serverless

4. What is your traffic pattern?

- High volume, simple operations → Edge functions

- Variable volume, complex operations → Traditional serverless

5. What is your team's expertise?

- Frontend/JavaScript → Edge functions

- Backend/Multiple languages → Traditional serverless
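The first three questions can be sketched as a small function. This is a simplification under stated assumptions: the `Workload` shape is hypothetical, the thresholds are the ones listed above, and traffic pattern and team expertise are left out because they are judgment calls rather than hard limits.

```typescript
type Recommendation = "edge" | "traditional" | "either";

interface Workload {
  latencyTargetMs: number | null; // null means latency tolerant
  globalUsers: boolean;
  memoryMb: number;
  maxDurationSec: number;
  needsRelationalDb: boolean;
}

function recommend(w: Workload): Recommendation {
  // Hard limits first: these rule out edge functions regardless of latency goals.
  if (w.memoryMb > 128 || w.maxDurationSec > 30 || w.needsRelationalDb) {
    return "traditional";
  }
  if (w.latencyTargetMs === null) {
    return "traditional"; // latency tolerant
  }
  if (w.globalUsers && w.latencyTargetMs < 50) {
    return "edge"; // under 50ms globally
  }
  if (w.latencyTargetMs < 100) {
    return "either"; // under 100ms, achievable regionally
  }
  return "traditional";
}
```

A result of "either" is where the remaining two questions, along with cost, would break the tie.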

The Future

The line between edge and traditional serverless is blurring:

- Edge databases (D1, Turso, Neon Edge) are improving

- Edge functions are getting more resources

- Traditional serverless is getting faster cold starts

- Hybrid routing is becoming automatic

By 2027, the distinction may matter less as platforms converge. For now, understanding the tradeoffs helps you choose the right tool for each part of your application.
