Physical AI—the term NVIDIA coined at CES 2026—represents a fundamental shift in how we build intelligent systems that interact with the real world. For software developers, this opens up entirely new application categories that were previously the domain of specialized robotics engineers.

This guide will help you understand the landscape and start building with Physical AI.

What is Physical AI?

Physical AI refers to AI systems that can:

- Understand physical environments - 3D spatial awareness, object recognition

- Predict physical outcomes - How objects will move, interact, fall

- Plan physical actions - Navigation, manipulation, coordination

- Execute in the real world - Control robots, vehicles, machines

Unlike traditional AI that operates on text, images, or code, Physical AI must deal with the messiness of reality: gravity, friction, unexpected obstacles, and real-time constraints.

The Software Stack

┌─────────────────────────────────────────────────────────────┐

│ Physical AI Stack │

├─────────────────────────────────────────────────────────────┤

│ │

│ Application Layer │

│ ├── Task Planning (What to do) │

│ ├── Motion Planning (How to move) │

│ └── Behavior Control (When to act) │

│ │

│ Foundation Models │

│ ├── NVIDIA Cosmos (World simulation) │

│ ├── Vision-Language Models (Scene understanding) │

│ └── Manipulation Models (Grasping, handling) │

│ │

│ Simulation & Training │

│ ├── Isaac Sim (NVIDIA) │

│ ├── Gazebo (Open source) │

│ └── MuJoCo (DeepMind) │

│ │

│ Robot Operating System │

│ ├── ROS 2 (Standard framework) │

│ ├── Navigation Stack │

│ └── MoveIt 2 (Manipulation) │

│ │

│ Hardware Abstraction │

│ ├── Motor controllers │

│ ├── Sensor interfaces │

│ └── Safety systems │

│ │

└─────────────────────────────────────────────────────────────┘

NVIDIA Cosmos Foundation Model

Cosmos is NVIDIA's foundation model for Physical AI, designed to simulate realistic physical environments.

Key Capabilities

| Feature | Description | Use Case |

|---|---|---|

| World Generation | Create diverse 3D environments | Training data generation |

| Physics Simulation | Accurate physical interactions | Robot behavior testing |

| Synthetic Data | Photo-realistic rendering | Computer vision training |

| Scenario Variation | Randomize conditions | Robust policy learning |

Getting Started with Cosmos

# Note: API is illustrative based on announced capabilities

from nvidia_cosmos import CosmosClient, WorldConfig, AgentConfig

# Initialize Cosmos client

cosmos = CosmosClient(api_key="your-api-key")

# Define a warehouse environment

world_config = WorldConfig(

template="indoor_warehouse",

size=(50, 50, 10), # meters

features={

"shelving_units": 20,

"pallets": 50,

"obstacles": "random",

"lighting": "industrial"

},

physics={

"gravity": 9.81,

"friction_coefficient": 0.6

}

)

# Create the simulation world

world = cosmos.create_world(world_config)

# Define robot agent

robot_config = AgentConfig(

model="mobile_manipulator",

sensors=["lidar", "rgbd_camera", "force_torque"],

capabilities=["navigation", "manipulation"]

)

# Spawn robot in the world

robot = world.spawn_agent(robot_config, position=(0, 0, 0))

# Generate training scenarios

scenarios = world.generate_scenarios(

task="pick_and_place",

variations=1000,

difficulty_range=(0.3, 0.9)

)

# Export for training

cosmos.export_dataset(

scenarios,

format="isaac_sim",

output_path="./training_data"

)Simulation Environments

NVIDIA Isaac Sim

Isaac Sim is the primary simulation environment for robotics development with NVIDIA hardware.

# Isaac Sim scene setup

from omni.isaac.core import World

from omni.isaac.core.robots import Robot

from omni.isaac.sensor import Camera, Lidar

# Create simulation world

world = World(stage_units_in_meters=1.0)

# Add ground plane

world.scene.add_default_ground_plane()

# Load a robot model

robot = world.scene.add(

Robot(

prim_path="/World/Robot",

name="my_robot",

usd_path="/path/to/robot.usd"

)

)

# Add sensors

camera = Camera(

prim_path="/World/Robot/camera",

resolution=(640, 480),

frequency=30

)

lidar = Lidar(

prim_path="/World/Robot/lidar",

rotation_frequency=10,

horizontal_fov=360,

vertical_fov=30

)

# Simulation loop

while simulation_running:

world.step(render=True)

# Get sensor data

rgb_image = camera.get_rgb()

depth_image = camera.get_depth()

lidar_points = lidar.get_point_cloud()

# Run your AI policy

action = policy.predict(rgb_image, depth_image, lidar_points)

# Apply action to robot

robot.apply_action(action)Gazebo (Open Source Alternative)

For those who prefer open-source tools:

# ROS 2 + Gazebo setup

import rclpy

from rclpy.node import Node

from geometry_msgs.msg import Twist

from sensor_msgs.msg import LaserScan, Image

class RobotController(Node):

def __init__(self):

super().__init__('robot_controller')

# Publishers

self.cmd_vel_pub = self.create_publisher(

Twist, '/cmd_vel', 10

)

# Subscribers

self.lidar_sub = self.create_subscription(

LaserScan, '/scan', self.lidar_callback, 10

)

self.camera_sub = self.create_subscription(

Image, '/camera/image_raw', self.camera_callback, 10

)

# Control loop

self.timer = self.create_timer(0.1, self.control_loop)

def lidar_callback(self, msg):

self.latest_scan = msg

def camera_callback(self, msg):

self.latest_image = msg

def control_loop(self):

# Your AI policy here

if hasattr(self, 'latest_scan') and hasattr(self, 'latest_image'):

action = self.policy.predict(

self.latest_scan,

self.latest_image

)

cmd = Twist()

cmd.linear.x = action.linear_velocity

cmd.angular.z = action.angular_velocity

self.cmd_vel_pub.publish(cmd)

def main():

rclpy.init()

controller = RobotController()

rclpy.spin(controller)

rclpy.shutdown()

ROS 2 Integration Patterns

ROS 2 (Robot Operating System 2) is the standard framework for robotics development.

Project Structure

my_robot_project/

├── src/

│ ├── my_robot_description/ # Robot URDF/SDF models

│ │ ├── urdf/

│ │ ├── meshes/

│ │ └── config/

│ ├── my_robot_bringup/ # Launch files

│ │ ├── launch/

│ │ └── config/

│ ├── my_robot_control/ # Control nodes

│ │ ├── my_robot_control/

│ │ └── setup.py

│ └── my_robot_ai/ # AI components

│ ├── my_robot_ai/

│ │ ├── perception.py

│ │ ├── planning.py

│ │ └── policy.py

│ └── setup.py

├── docker/

│ └── Dockerfile

└── README.mdAI-Powered Navigation Node

# my_robot_ai/policy.py

import rclpy

from rclpy.node import Node

from nav_msgs.msg import OccupancyGrid, Path

from geometry_msgs.msg import PoseStamped

import torch

from .models import NavigationPolicy

class AINavigationNode(Node):

def __init__(self):

super().__init__('ai_navigation')

# Load trained policy

self.policy = NavigationPolicy.load('models/nav_policy.pt')

self.policy.eval()

# ROS interfaces

self.goal_sub = self.create_subscription(

PoseStamped, '/goal_pose', self.goal_callback, 10

)

self.map_sub = self.create_subscription(

OccupancyGrid, '/map', self.map_callback, 10

)

self.path_pub = self.create_publisher(

Path, '/planned_path', 10

)

self.get_logger().info('AI Navigation node initialized')

def goal_callback(self, goal_msg):

if not hasattr(self, 'current_map'):

self.get_logger().warn('No map received yet')

return

# Prepare inputs for policy

map_tensor = self.preprocess_map(self.current_map)

goal_tensor = self.preprocess_goal(goal_msg)

# Run inference

with torch.no_grad():

path_waypoints = self.policy(map_tensor, goal_tensor)

# Convert to ROS Path message

path_msg = self.waypoints_to_path(path_waypoints)

self.path_pub.publish(path_msg)

def map_callback(self, map_msg):

self.current_map = map_msg

def preprocess_map(self, map_msg):

# Convert OccupancyGrid to tensor

width = map_msg.info.width

height = map_msg.info.height

data = torch.tensor(map_msg.data).reshape(height, width)

return data.unsqueeze(0).unsqueeze(0).float() / 100.0

def preprocess_goal(self, goal_msg):

return torch.tensor([

goal_msg.pose.position.x,

goal_msg.pose.position.y,

goal_msg.pose.orientation.z,

goal_msg.pose.orientation.w

]).unsqueeze(0)

def waypoints_to_path(self, waypoints):

path = Path()

path.header.frame_id = 'map'

path.header.stamp = self.get_clock().now().to_msg()

for wp in waypoints:

pose = PoseStamped()

pose.header = path.header

pose.pose.position.x = float(wp[0])

pose.pose.position.y = float(wp[1])

path.poses.append(pose)

return pathFrom Simulation to Real-World Deployment

The biggest challenge in robotics is the "sim-to-real" gap—policies that work in simulation often fail in the real world.

Domain Randomization

Train with variations to build robust policies:

class DomainRandomizer:

def __init__(self):

self.randomization_params = {

'lighting': {'intensity': (0.3, 1.5), 'color_temp': (3000, 7000)},

'physics': {'friction': (0.4, 0.8), 'mass_scale': (0.9, 1.1)},

'sensor': {'noise_std': (0.0, 0.05), 'dropout': (0.0, 0.1)},

'geometry': {'scale': (0.95, 1.05), 'position_offset': (-0.02, 0.02)}

}

def randomize_environment(self, env):

# Randomize lighting

env.set_lighting(

intensity=random.uniform(*self.randomization_params['lighting']['intensity']),

color_temp=random.uniform(*self.randomization_params['lighting']['color_temp'])

)

# Randomize physics

for obj in env.get_objects():

obj.friction = random.uniform(*self.randomization_params['physics']['friction'])

obj.mass *= random.uniform(*self.randomization_params['physics']['mass_scale'])

# Add sensor noise

env.sensor_noise_std = random.uniform(*self.randomization_params['sensor']['noise_std'])

return env

# Training loop with domain randomization

randomizer = DomainRandomizer()

for episode in range(num_episodes):

env = randomizer.randomize_environment(base_env)

observation = env.reset()

while not done:

action = policy(observation)

observation, reward, done, info = env.step(action)

# Update policy...Reality Gap Mitigation

class SimToRealAdapter:

"""Adapts simulation-trained policies for real-world deployment"""

def __init__(self, sim_policy, calibration_data):

self.sim_policy = sim_policy

self.calibration = self.compute_calibration(calibration_data)

def compute_calibration(self, data):

"""Learn systematic differences between sim and real"""

# Compute observation normalization

obs_mean = np.mean(data['observations'], axis=0)

obs_std = np.std(data['observations'], axis=0)

# Compute action scaling

action_scale = data['real_action_scale'] / data['sim_action_scale']

return {

'obs_mean': obs_mean,

'obs_std': obs_std,

'action_scale': action_scale

}

def predict(self, real_observation):

# Normalize observation to match simulation distribution

normalized_obs = (real_observation - self.calibration['obs_mean']) / self.calibration['obs_std']

# Get action from simulation-trained policy

sim_action = self.sim_policy.predict(normalized_obs)

# Scale action for real hardware

real_action = sim_action * self.calibration['action_scale']

# Apply safety limits

real_action = np.clip(real_action, self.action_limits[0], self.action_limits[1])

return real_action

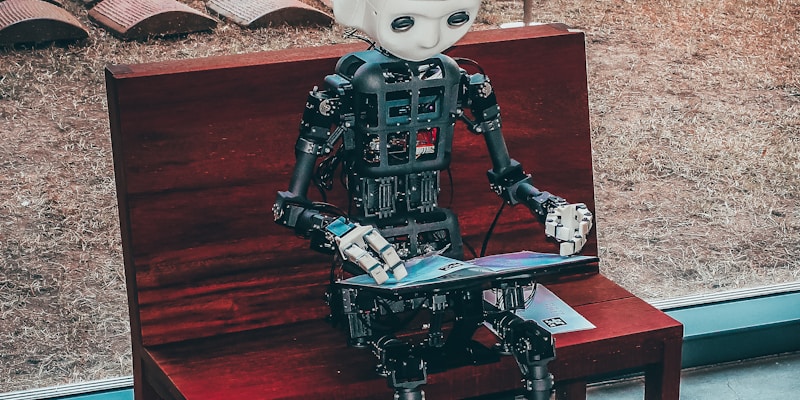

LG's Humanoid Robot: A Case Study

At CES 2026, LG unveiled a humanoid robot designed for household tasks. Here's what we can learn from their approach:

Architecture Highlights

- Modular task system - Each task (laundry, dishes) is a separate module

- Hierarchical control - High-level planning separate from motor control

- Human interaction layer - Natural language understanding for commands

- Safety-first design - Multiple redundant safety systems

Simplified Task Architecture

class HouseholdRobot:

def __init__(self):

self.perception = PerceptionModule()

self.planner = TaskPlanner()

self.manipulator = ManipulationController()

self.navigator = NavigationController()

self.safety = SafetyMonitor()

async def execute_task(self, natural_language_command: str):

# Parse command

task = await self.parse_command(natural_language_command)

# Plan task sequence

plan = await self.planner.create_plan(task, self.perception.get_scene())

# Execute with safety monitoring

for step in plan.steps:

# Check safety before each step

if not self.safety.is_safe_to_proceed():

await self.handle_safety_stop()

continue

if step.type == 'navigate':

await self.navigator.go_to(step.target)

elif step.type == 'manipulate':

await self.manipulator.execute(step.action)

elif step.type == 'wait':

await asyncio.sleep(step.duration)

# Verify step completion

if not await self.verify_step(step):

await self.handle_step_failure(step)

async def parse_command(self, command: str):

# Use LLM to parse natural language to structured task

response = await self.llm.parse(

prompt=f"Parse this household task: {command}",

output_format=TaskSchema

)

return responseGetting Started Resources

Development Environment Setup

# Install ROS 2 Humble (Ubuntu 22.04)

sudo apt update && sudo apt install -y curl gnupg lsb-release

sudo curl -sSL https://raw.githubusercontent.com/ros/rosdistro/master/ros.key -o /usr/share/keyrings/ros-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/ros-archive-keyring.gpg] http://packages.ros.org/ros2/ubuntu $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/ros2.list > /dev/null

sudo apt update && sudo apt install -y ros-humble-desktop

# Install simulation tools

sudo apt install -y ros-humble-gazebo-ros-pkgs

pip install nvidia-isaac-sim # Requires NVIDIA GPU

# Install AI/ML dependencies

pip install torch torchvision

pip install stable-baselines3 # RL algorithms

pip install gymnasium # Environment interfaceRecommended Learning Path

- Week 1-2: ROS 2 basics (nodes, topics, services)

- Week 3-4: Simulation with Gazebo

- Week 5-6: Computer vision for robotics

- Week 7-8: Navigation and path planning

- Week 9-10: Manipulation basics

- Week 11-12: RL for robotics

- Ongoing: Isaac Sim and Cosmos integration

Key Takeaways

- Physical AI is software - You don't need a hardware background to start

- Simulation is essential - Train and test before touching real robots

- ROS 2 is the standard - Learn it to work in the ecosystem

- Domain randomization helps bridge sim-to-real gap

- Start with navigation - It's the most accessible entry point

Resources

- NVIDIA Isaac Sim Documentation

- ROS 2 Humble Documentation

- Gazebo Simulation

- MoveIt 2 for Manipulation

- OpenAI Gym / Gymnasium

Interested in robotics development? Join the CODERCOPS community to connect with other developers exploring Physical AI.

Comments