When one of Wall Street's most storied institutions goes all-in on AI, the entire enterprise technology world pays attention. In early February 2026, Goldman Sachs revealed its partnership with Anthropic to build autonomous AI agents powered by Claude, targeting two of banking's most process-heavy functions: trade accounting and client compliance. With over 12,000 developers and thousands of back-office staff now using Claude, this is one of the most significant enterprise AI deployments in financial services to date.

This is not a story about a company slapping an AI chatbot on its intranet. Goldman's approach, embedding Anthropic engineers directly inside the bank for six months to co-develop purpose-built AI agents, offers a blueprint that other enterprises would be wise to study.

The Partnership Model: Embedded Engineering

The most distinctive aspect of Goldman's deployment is the partnership structure. Rather than purchasing an off-the-shelf AI product and customizing it internally, Goldman embedded Anthropic engineers directly into its operations for six months. These engineers worked alongside Goldman's own developers to co-create AI agents tailored specifically for financial services workflows.

This approach offers several advantages:

- Domain expertise transfer: Anthropic engineers learned the nuances of financial compliance and trade processing, while Goldman developers gained deep expertise in Claude's architecture and capabilities.

- Custom optimization: The agents were built from the ground up for Goldman's specific workflows, rather than being generic tools adapted after the fact.

- Security alignment: Having Anthropic engineers inside Goldman's security perimeter meant that sensitive architectural decisions could be made collaboratively, with both teams understanding the constraints.

For enterprises considering large-scale AI adoption, this embedded partnership model may be more effective than the traditional vendor-customer relationship. The initial investment is higher, but the result is likely to fit the organization's workflows far more closely than a generic product customized after purchase.

What the AI Agents Actually Do

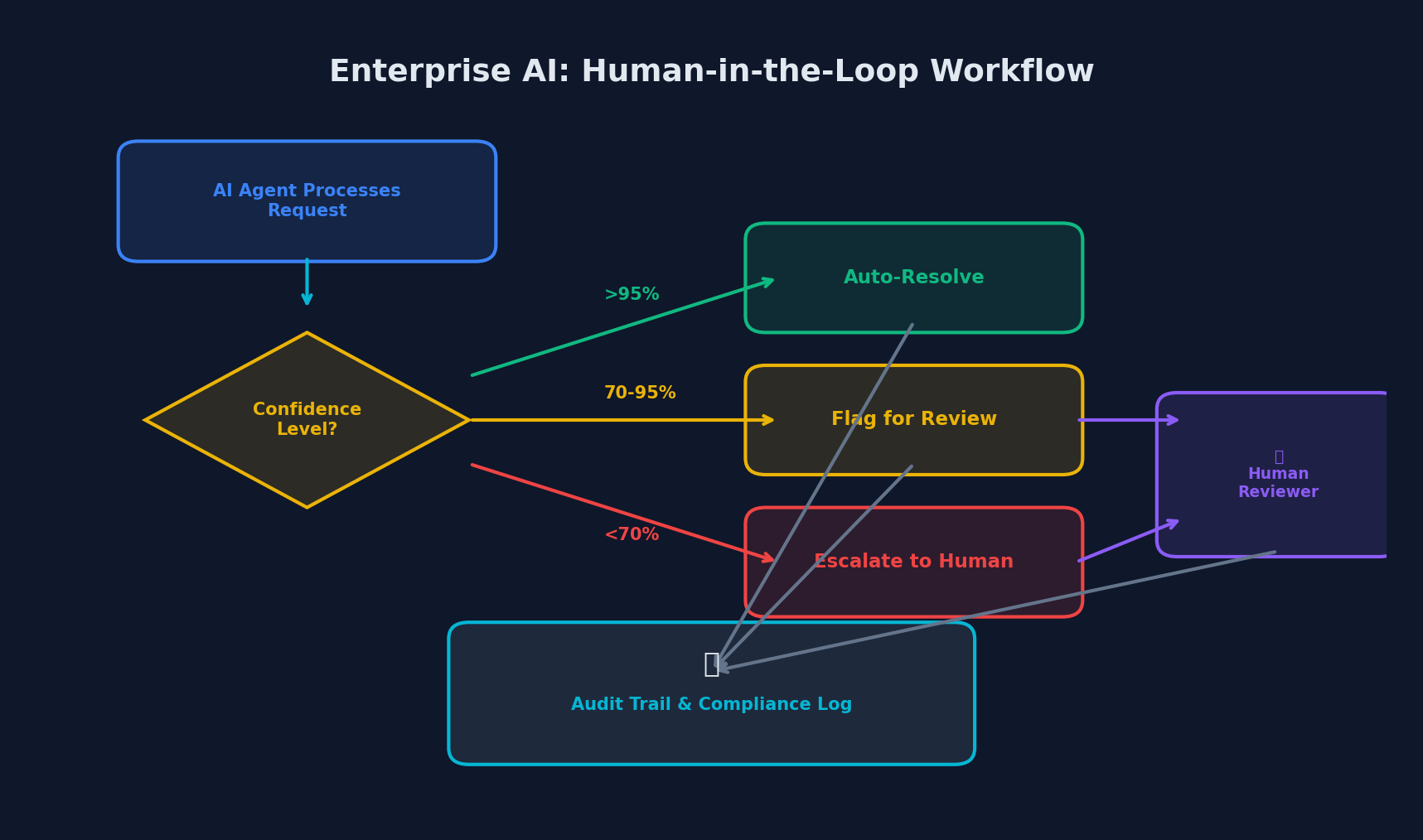

The human-in-the-loop workflow: AI auto-resolves high-confidence decisions, escalates uncertain ones


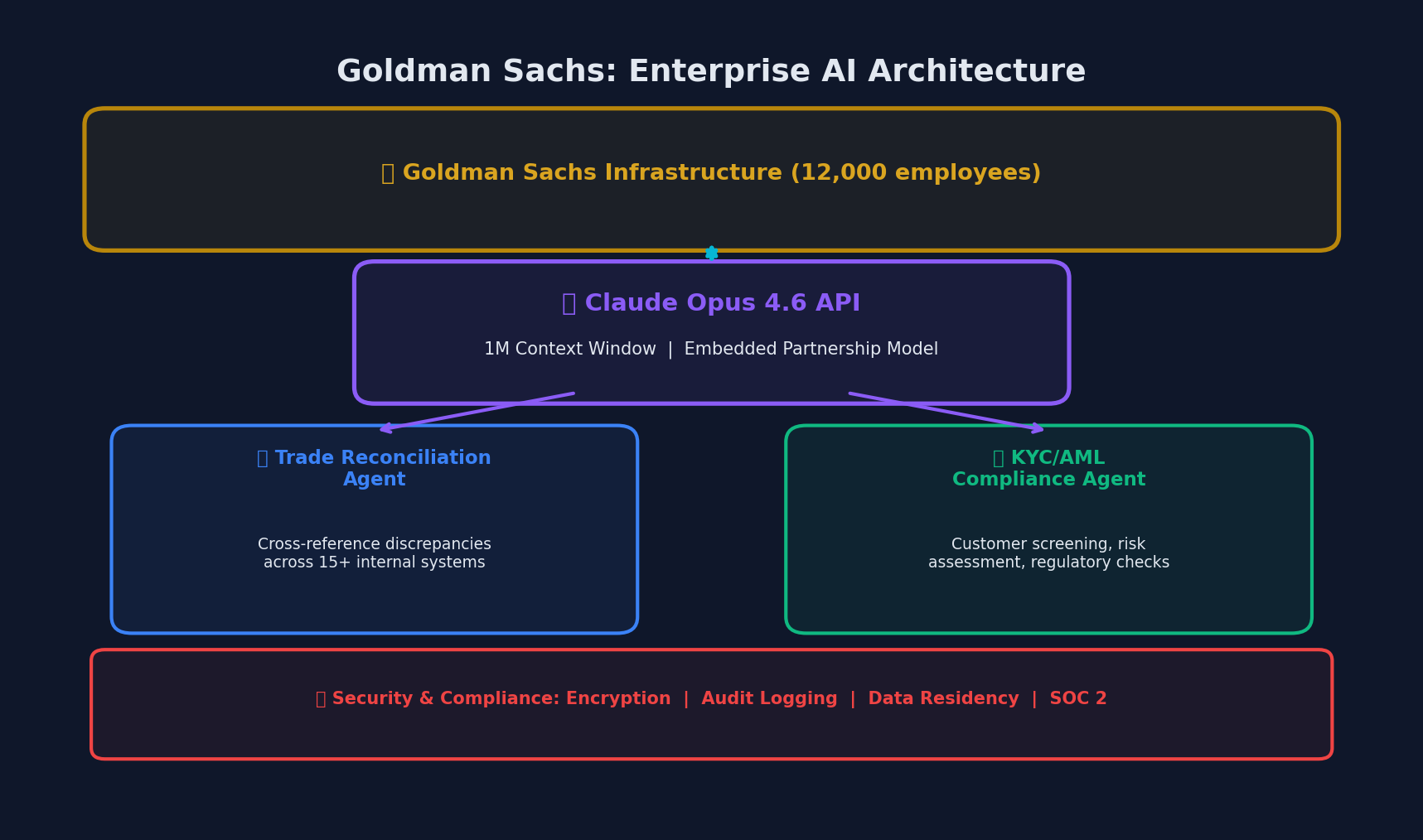

Goldman's AI agents operate in two primary domains, both of which involve massive scale and regulatory complexity.

Trade Accounting Automation

Goldman Sachs processes millions of trades and transactions annually. Each transaction must be recorded, reconciled, and verified across multiple systems. Historically, this involved significant manual effort by teams of accountants and operations staff.

The Claude-powered agent handles:

- Transaction matching: Reviewing millions of records across internal and external systems, matching trades to confirmations, and identifying discrepancies

- Exception handling: When mismatches are found, the agent categorizes them by type and severity, routes them to the appropriate human reviewer, and provides context to accelerate resolution

- Regulatory reporting: Generating required reports for regulators with accurate data aggregation and formatting

The scale of this task is what makes it compelling for AI. Humans reviewing millions of transactions inevitably make errors due to fatigue and sheer volume. An AI agent does not tire, so its accuracy does not degrade as the workload grows, and it can flag the edge cases that genuinely require human judgment.

Client Vetting and Onboarding (KYC/AML)

Know Your Customer (KYC) and Anti-Money Laundering (AML) compliance is one of the most resource-intensive functions in banking. Onboarding a single institutional client can involve weeks of document review, background checks, and regulatory verification.

The Claude-powered agent handles:

- Document analysis: Reading and interpreting corporate documents, identification records, and financial statements

- Regulatory rule application: Applying KYC and AML regulatory requirements across jurisdictions, interpreting policy language, and executing multi-step verification processes

- Risk scoring: Generating initial risk assessments based on documented criteria, with human analysts reviewing and approving the final determinations
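To make the risk-scoring step concrete, here is a minimal Python sketch of a weighted checklist. Goldman has not published its criteria, so the factor names, weights, and band cut-offs below are hypothetical placeholders. The point is the shape of the logic: documented criteria produce an initial score, and every result stays marked for analyst approval.

```python
from dataclasses import dataclass, field

# Hypothetical risk factors and weights. A real bank's criteria come from
# its documented KYC/AML policy, not from this sketch.
RISK_WEIGHTS = {
    "high_risk_jurisdiction": 40,
    "politically_exposed_person": 30,
    "complex_ownership_structure": 20,
    "adverse_media_hit": 25,
    "missing_documents": 15,
}


@dataclass
class RiskAssessment:
    score: int
    band: str
    triggered: list[str] = field(default_factory=list)
    # This is only an initial assessment: a human analyst makes the final call.
    requires_analyst_approval: bool = True


def score_client(flags: dict[str, bool]) -> RiskAssessment:
    """Sum the weights of every documented factor that applies to the client."""
    triggered = [name for name, present in flags.items()
                 if present and name in RISK_WEIGHTS]
    score = sum(RISK_WEIGHTS[name] for name in triggered)
    # Hypothetical band cut-offs.
    if score >= 60:
        band = "high"
    elif score >= 25:
        band = "medium"
    else:
        band = "low"
    return RiskAssessment(score=score, band=band, triggered=triggered)
```

For example, a client flagged only as a politically exposed person with missing documents scores 30 + 15 = 45 and lands in the medium band, still pending an analyst's sign-off.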

Internal testing showed this AI-powered process reduced client onboarding times by 30%, a significant improvement in a process that directly impacts revenue generation.

Technical Architecture

Goldman Sachs' enterprise AI architecture: Claude Opus 4.6 powering trade reconciliation and compliance


While Goldman has not disclosed its full technical stack, the available information reveals a thoughtful architecture:

Model Selection

The deployment uses Claude Opus 4.6 with a 1-million-token context window. This large context window is critical for financial applications where a single decision might require reviewing dozens of documents simultaneously. The choice of Claude was deliberate; Goldman selected Anthropic over competitors like OpenAI due to what they described as Anthropic's strength in safety, interpretability, and reliability for regulated industries.
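A quick way to see why the window size matters is to estimate whether a document bundle fits in it. The sketch below assumes roughly four characters per token, a common rule of thumb for English text rather than an exact figure; real budgeting should use the model provider's own token counting. The 50,000-token reserve for instructions and output is likewise an arbitrary assumption.

```python
# Rough heuristic (assumption): about 4 characters per token for English text.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000
# Hypothetical headroom kept free for instructions and the model's response.
RESERVED_FOR_OUTPUT = 50_000


def estimate_tokens(text: str) -> int:
    # Ceiling division, so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str]) -> tuple[bool, int]:
    """Return whether the bundle fits, plus its estimated token count."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total <= CONTEXT_WINDOW_TOKENS - RESERVED_FOR_OUTPUT, total
```

Under these assumptions, 40 documents of about 80,000 characters each come to roughly 800,000 tokens and fit, while 60 such documents (about 1.2 million tokens) would have to be split across calls or summarized first.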

Agent Design Pattern

The agents are designed as autonomous multi-step systems, not simple question-answering chatbots. A typical agent workflow might look like this:

Trade Reconciliation Agent Flow:

1. Receive batch of unreconciled transactions
2. Query internal trade management system for details
3. Query counterparty confirmation system
4. Match transactions using multi-criteria comparison
5. Identify discrepancies by category:
   - Price differences
   - Quantity mismatches
   - Settlement date conflicts
   - Missing confirmations
6. For each discrepancy:
   a. Assess severity (auto-resolvable vs. needs human review)
   b. If auto-resolvable: apply correction and log
   c. If needs review: create exception ticket with context
7. Generate reconciliation report
8. Update audit trail

This is a pattern that scales well: the AI handles the volume work while humans focus on genuine exceptions and complex decisions.
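The matching and triage steps of the reconciliation flow can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not Goldman's implementation: trades match on a shared `trade_id`, the `Trade` fields are hypothetical, and only a tiny price difference (below a made-up rounding tolerance) counts as auto-resolvable. Everything else becomes an exception for human review.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Trade:
    # Hypothetical record shape, used for both internal trades and confirmations.
    trade_id: str
    price: float
    quantity: int
    settlement_date: date


# Hypothetical tolerance: a price gap this small is treated as rounding noise.
PRICE_ROUNDING_TOLERANCE = 0.005


def reconcile(internal: list[Trade], confirmations: list[Trade]):
    """Match internal trades to confirmations and sort breaks into two buckets."""
    by_id = {c.trade_id: c for c in confirmations}
    auto_resolved, exceptions = [], []
    for trade in internal:
        confirmation = by_id.get(trade.trade_id)
        if confirmation is None:
            exceptions.append((trade.trade_id, "missing_confirmation"))
            continue
        # Multi-criteria comparison: price, quantity, settlement date.
        issues = []
        price_gap = abs(trade.price - confirmation.price)
        if price_gap > 0:
            issues.append("price_difference")
        if trade.quantity != confirmation.quantity:
            issues.append("quantity_mismatch")
        if trade.settlement_date != confirmation.settlement_date:
            issues.append("settlement_date_conflict")
        if not issues:
            continue  # clean match, nothing to do
        if issues == ["price_difference"] and price_gap <= PRICE_ROUNDING_TOLERANCE:
            # Auto-resolvable: a real system would apply the correction and log it.
            auto_resolved.append((trade.trade_id, "price_rounding"))
        else:
            # Needs review: becomes an exception ticket with the issues as context.
            exceptions.append((trade.trade_id, ", ".join(issues)))
    return auto_resolved, exceptions
```

Floats keep the sketch short; production code handling money would normally use `decimal.Decimal` for prices.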

Security and Compliance

For a bank managing $2.5 trillion in assets under supervision, security is non-negotiable. The deployment includes:

- Data isolation: All processing occurs within Goldman's own infrastructure, with no data leaving the bank's security perimeter

- Audit trails: Every AI decision is logged with full traceability, meeting regulatory requirements for explainability

- Human-in-the-loop: Critical decisions require human approval; the AI makes recommendations but does not execute unilateral actions on high-risk tasks

- Model monitoring: Continuous monitoring of AI outputs for accuracy, bias, and drift
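One simple way to implement the monitoring item is to track how often human reviewers agree with the AI's recommendation over a rolling window. The sketch below is a hypothetical illustration: the window size and alert threshold are assumptions, and agreement rate is only one signal. Shifts in input data and bias checks would need their own measures.

```python
from collections import deque
from typing import Optional


class AgreementMonitor:
    """Track how often human reviewers accept the AI's recommendation.

    A falling agreement rate is one simple drift signal. The window size and
    alert threshold are hypothetical and would be tuned per workflow.
    """

    def __init__(self, window: int = 500, alert_below: float = 0.9):
        # A bounded deque drops the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, ai_decision: str, human_decision: str) -> None:
        self.outcomes.append(ai_decision == human_decision)

    def agreement_rate(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Only alert once the window is full, to avoid noise from tiny samples.
        rate = self.agreement_rate()
        return (len(self.outcomes) == self.outcomes.maxlen
                and rate is not None and rate < self.alert_below)
```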

Results and Performance

The early results from Goldman's deployment are compelling:

| Metric | Improvement |
|---|---|
| Client onboarding time | 30% reduction |
| Developer productivity | 20%+ improvement |
| Manual labor hours | Thousands saved weekly |
| Transaction processing | Consistent accuracy at scale |

Goldman's CIO Marco Argenti noted that while the bank employs thousands of people in the compliance and accounting functions where AI agents will operate, it is "premature" to expect the technology to lead to job losses for those workers. Instead, the goal is to augment human capacity, allowing existing staff to handle more complex work while the AI manages routine processing.

Lessons for Enterprise AI Adoption

Goldman's deployment offers several actionable lessons for other enterprises considering large-scale AI adoption.

1. Start with High-Volume, Rule-Based Processes

Goldman did not start by trying to replace its investment bankers or traders. They targeted functions that are high-volume, heavily rule-based, and where errors are costly but patterns are identifiable. Trade reconciliation and KYC compliance fit this profile perfectly.

For your organization, identify processes that match these criteria:

- High volume: Thousands or millions of repetitive actions

- Rule-based: Clear criteria for correct vs. incorrect outcomes

- Costly errors: Mistakes have financial, regulatory, or reputational consequences

- Human bottlenecks: Current processes are limited by the speed and availability of human reviewers
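The four criteria can be turned into a simple screening pass over candidate processes. In this sketch the fields, cut-off values, and example processes are all hypothetical; it only counts how many criteria each process meets so the strongest candidates rise to the top.

```python
from dataclasses import dataclass


@dataclass
class CandidateProcess:
    name: str
    monthly_volume: int          # repetitive actions per month
    has_clear_rules: bool        # correct vs. incorrect outcome is well defined
    error_cost_is_high: bool     # financial, regulatory, or reputational impact
    review_backlog_days: float   # how long items wait for a human reviewer


# Hypothetical cut-offs; choose values that make sense for your organization.
MIN_MONTHLY_VOLUME = 10_000
MIN_BACKLOG_DAYS = 2.0


def criteria_met(p: CandidateProcess) -> list[str]:
    met = []
    if p.monthly_volume >= MIN_MONTHLY_VOLUME:
        met.append("high_volume")
    if p.has_clear_rules:
        met.append("rule_based")
    if p.error_cost_is_high:
        met.append("costly_errors")
    if p.review_backlog_days >= MIN_BACKLOG_DAYS:
        met.append("human_bottleneck")
    return met


def rank_candidates(processes: list[CandidateProcess]) -> list[tuple[str, int]]:
    """Order candidates by how many of the four criteria they satisfy."""
    scored = [(p.name, len(criteria_met(p))) for p in processes]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

For instance, a hypothetical `CandidateProcess("trade reconciliation", 2_000_000, True, True, 3.0)` meets all four criteria, while an ad hoc research task with low volume and no clear rules would sink to the bottom of the list.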

2. Invest in the Partnership, Not Just the Product

The embedded engineering model is more expensive and complex than a standard software purchase. But for mission-critical deployments, the customization and domain alignment it produces can be the difference between a successful deployment and an expensive experiment.

If you cannot embed vendor engineers directly, at minimum:

- Assign dedicated internal engineers to the AI project full-time

- Ensure your team has deep access to the AI vendor's technical team

- Build domain-specific evaluation datasets before deployment

- Plan for a 3-6 month development cycle before production use

3. Design for Auditability from Day One

In regulated industries, the ability to explain why an AI made a specific decision is not optional. Goldman's architecture logs every decision with full context, creating an audit trail that regulators can review.

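```python
# Example: Structured decision logging for AI agents
import json
from datetime import datetime, timezone

# Decisions below this confidence are flagged for human review.
# 0.9 is a placeholder; calibrate it per task type.
CONFIDENCE_THRESHOLD = 0.9


class AuditableDecision:
    def __init__(self, agent_id, task_type, audit_log_path="audit_log.jsonl"):
        self.agent_id = agent_id
        self.task_type = task_type
        self.timestamp = datetime.now(timezone.utc)
        self.inputs = {}
        self.reasoning_steps = []
        self.decision = None
        self.confidence = None
        self.human_review_required = False
        self.audit_log_path = audit_log_path

    def log_reasoning_step(self, step_description, evidence):
        # Record each intermediate step together with its supporting evidence.
        self.reasoning_steps.append({
            "step": step_description,
            "evidence": evidence,
            "timestamp": datetime.now(timezone.utc),
        })

    def finalize(self, decision, confidence):
        self.decision = decision
        self.confidence = confidence
        self.human_review_required = confidence < CONFIDENCE_THRESHOLD
        self._persist_to_audit_log()

    def _persist_to_audit_log(self):
        # Append one JSON line per decision; a stand-in for a real audit store.
        # default=str serializes the datetime fields as strings.
        record = {k: v for k, v in vars(self).items() if k != "audit_log_path"}
        with open(self.audit_log_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record, default=str) + "\n")
```

Even if you are not in a regulated industry, building auditability into your AI systems protects you from errors, builds trust with stakeholders, and creates the data you need to improve the system over time.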

4. Measure Everything, but Measure the Right Things

Goldman tracks onboarding time reduction, developer productivity, and labor hours saved. These are outcome metrics, not activity metrics. Too many AI deployments measure things like "number of AI interactions" or "tokens processed" without connecting them to business outcomes.

Define your success metrics before deployment:

- What specific business outcome will this AI improve?

- How will you measure the baseline (pre-AI performance)?

- What is the minimum improvement that justifies the investment?

- How will you detect if the AI's performance degrades over time?
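Answering the baseline and minimum-improvement questions mostly comes down to simple arithmetic on before-and-after measurements. The sketch below uses the median of a duration metric such as days to onboard a client; the 15% hurdle and the sample numbers in the usage note are made up for illustration.

```python
from statistics import median

# Hypothetical business case: the deployment must cut the median duration
# by at least 15% to justify its cost.
MIN_JUSTIFIED_REDUCTION = 15.0


def percent_reduction(baseline: list[float], current: list[float]) -> float:
    """Percent reduction in the median of a duration metric (e.g. days to onboard)."""
    before, after = median(baseline), median(current)
    return (before - after) * 100 / before


def justifies_investment(baseline: list[float], current: list[float]) -> bool:
    return percent_reduction(baseline, current) >= MIN_JUSTIFIED_REDUCTION
```

With made-up baseline onboarding times of 18 to 25 days (median 20) and post-deployment times of 13 to 16 days (median 14), the reduction is 30%, which clears the hypothetical 15% hurdle. Using the median rather than the mean keeps a few unusually slow cases from distorting the comparison.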

5. Plan for Human-AI Collaboration, Not Replacement

Goldman has positioned the AI agents as augmenting its existing staff rather than replacing them. The agents handle routine volume work while humans focus on exceptions, complex decisions, and oversight. This is both a practical design choice (AI is not yet reliable enough for fully autonomous high-stakes decisions) and a strategic one (it reduces organizational resistance to adoption).

When designing your AI workflows, build explicit handoff points where human judgment is required. Define clear escalation criteria. And train your human teams not just on how to use the AI tools, but on how to effectively review and override AI decisions when necessary.
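Escalation criteria are easiest to review and audit when they are written down as explicit, named rules rather than buried in prompts. The sketch below is hypothetical: the decision fields and thresholds are placeholders, and the `ReviewOutcome` record shows one way to capture when a reviewer overrides the AI.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentDecision:
    action: str
    confidence: float
    amount_usd: float
    novel_case: bool  # no close precedent in historical decisions


# Hypothetical escalation criteria, written as named predicates so each
# handoff point is explicit and reviewable.
ESCALATION_RULES: dict[str, Callable[[AgentDecision], bool]] = {
    "low_confidence": lambda d: d.confidence < 0.85,
    "large_amount": lambda d: d.amount_usd >= 1_000_000,
    "novel_case": lambda d: d.novel_case,
}


def escalation_reasons(decision: AgentDecision) -> list[str]:
    """Return every rule that fires; an empty list means no handoff is needed."""
    return [name for name, rule in ESCALATION_RULES.items() if rule(decision)]


@dataclass
class ReviewOutcome:
    ai_action: str
    final_action: str
    reviewer: str
    reasons: list[str]

    @property
    def overridden(self) -> bool:
        # Logging overrides shows where reviewers disagree and feeds evaluation data.
        return self.ai_action != self.final_action
```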

What Comes Next

Goldman has indicated that future agent development may target areas like employee surveillance (monitoring for compliance violations in communications) and investment banking pitchbook creation. The pattern is clear: start with well-defined, high-volume back-office processes, prove the value, then expand to more complex and judgment-intensive domains.

For the broader financial services industry, Goldman's deployment is likely to trigger a wave of similar partnerships. JPMorgan, Morgan Stanley, and other major banks may face competitive pressure to match Goldman's efficiency gains, and Anthropic, OpenAI, and Google can all be expected to court these institutions.

For enterprise developers outside financial services, the lessons are universal. The combination of embedded partnership development, focused use case selection, rigorous auditability, and human-AI collaboration represents a mature approach to enterprise AI that goes far beyond the hype. Goldman's deployment is a template worth studying, regardless of your industry.
