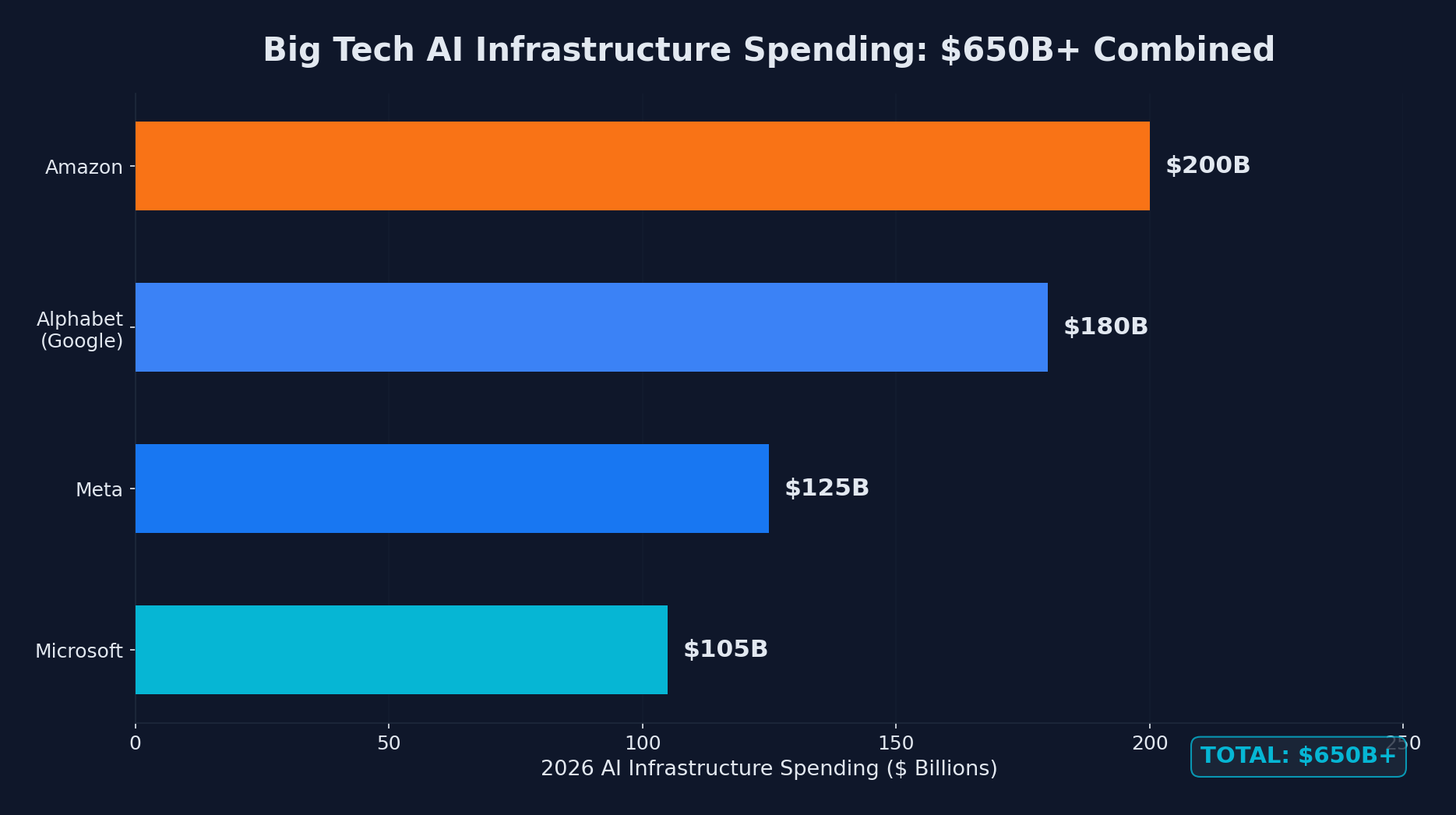

The numbers are staggering even by Big Tech standards. Four of the largest US technology companies (Amazon, Google parent Alphabet, Meta, and Microsoft) have collectively forecast capital expenditures approaching $650 billion for 2026. This is a boom without parallel this century, with each company's spending expected to match or exceed its own combined budgets for the past three years.

For developers, this is not just a Wall Street story. This infrastructure buildout will directly shape the tools you use, the platforms you deploy on, and the capabilities available to you for years to come. Here is a detailed breakdown of who is spending what, what they are building, and what it means for the developer ecosystem.

The Numbers: Company by Company

2026 AI infrastructure spending: Amazon leads at $200B, followed by Alphabet, Meta, and Microsoft


Amazon: $200 Billion

Amazon leads the pack with a planned $200 billion in capital expenditures for 2026, a 60% increase from $125 billion in 2025. The number exceeded analyst expectations by roughly $50 billion, causing Amazon shares to drop more than 10% in after-hours trading as investors processed the scale of the commitment.

CEO Andy Jassy made it clear on the earnings call that the vast majority of this spending is directed at AWS. The investment includes:

- Data center expansion: New facilities across multiple continents to meet exploding demand for AI compute

- Custom silicon: Continued development of Trainium AI training chips and Graviton processors, expected to generate over $10 billion in revenue in 2026

- Project Rainier: One of the largest operational AI clusters in the world, built in partnership with Anthropic

- Networking infrastructure: Massive fiber and interconnect buildouts to support the data throughput AI workloads demand

For developers, this means AWS will continue expanding its AI service catalog. Expect more powerful instances with Trainium chips, tighter Bedrock integrations, and lower latency for AI inference across more regions.

Alphabet (Google): $180 Billion

Google's parent company plans approximately $180 billion in spending, a remarkable 97% year-over-year increase from $92 billion in 2025 and one of the most aggressive growth rates among the four companies.

Google's spending is focused on:

- TPU v6 and beyond: Google's custom Tensor Processing Units remain a cornerstone of its AI infrastructure strategy, powering both internal models (Gemini) and Cloud TPU offerings for external developers

- Google Cloud data centers: Expansion in 11 new regions announced for 2026, bringing AI compute closer to users globally

- Gemini infrastructure: The compute required to train and serve Google's frontier Gemini models, which power everything from Search to Cloud AI products

- Subsea cables and networking: Google continues to invest heavily in global network infrastructure, including new subsea cable routes connecting Asia, Europe, and the Americas

For developers, Google Cloud Platform is likely to offer increasingly competitive pricing on AI inference and training, driven by the efficiency gains from custom TPU hardware. Vertex AI and Gemini API capabilities should expand significantly throughout the year.

Meta: $115-135 Billion

Meta's spending range of $115 to $135 billion reflects both its massive AI ambitions and continued investment in Reality Labs (AR/VR). This represents a significant jump from roughly $60 billion in 2025.

Meta's investment priorities include:

- Llama model training: The compute required to train and iterate on Meta's open-source Llama family of models, which have become a cornerstone of the open-source AI ecosystem

- AI-powered products: Infrastructure to serve AI features across Facebook, Instagram, WhatsApp, and Threads, reaching billions of users

- Reality Labs: Continued investment in AR/VR hardware and the metaverse vision, though this remains a smaller share of total capex

- Research compute: Meta AI Research remains one of the most prolific AI research organizations, requiring substantial compute resources

For developers, Meta's commitment to open-source models is perhaps the most directly impactful. The infrastructure buildout ensures that future Llama models will be competitive with closed-source alternatives, giving developers powerful foundation models they can run on their own infrastructure.

Microsoft: $105 Billion

Microsoft plans approximately $105 billion in capex for the fiscal year ending June 2026. Although it is the smallest figure among the four, it still represents an enormous commitment, roughly double the company's spending just two years earlier.

Microsoft's spending focuses on:

- Azure AI infrastructure: Expanding GPU and custom chip capacity across Azure's global network of data centers

- OpenAI partnership: Supporting the compute requirements of OpenAI's model training and the Azure OpenAI Service

- Copilot infrastructure: The compute powering GitHub Copilot, Microsoft 365 Copilot, and other Copilot products across Microsoft's portfolio

- Sovereign cloud: Building dedicated AI infrastructure in countries and regions with data sovereignty requirements

For developers, Microsoft's investment translates to more powerful Azure OpenAI Service offerings, expanded GitHub Copilot capabilities, and broader availability of AI-accelerated compute in Azure regions worldwide.
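As a quick check on the headline figure, the sketch below sums the forecasts quoted in this section and computes year-over-year growth wherever a 2025 baseline is given. It uses only the numbers stated above. Microsoft's figure covers its fiscal year rather than the calendar year, and no 2025 baseline is quoted for it, so it is left out of the growth calculation. Small differences from the percentages in the text (96% here versus 97% for Alphabet) most likely come from rounding of the base-year figures.

```python
# 2026 capex forecasts as quoted in this article, in billions of US dollars,
# stored as (low, high). Microsoft's figure is for its fiscal year ending June 2026.
FORECAST_2026 = {
    "Amazon": (200, 200),
    "Alphabet": (180, 180),
    "Meta": (115, 135),
    "Microsoft": (105, 105),
}

# 2025 baselines as quoted in this article; none is given for Microsoft.
BASELINE_2025 = {"Amazon": 125, "Alphabet": 92, "Meta": 60}


def total_range(forecasts):
    """Sum the low and high ends of every company's forecast."""
    low = sum(lo for lo, _ in forecasts.values())
    high = sum(hi for _, hi in forecasts.values())
    return low, high


def growth_pct(base, new):
    """Year-over-year growth as a percentage of the base year."""
    return (new - base) / base * 100


low, high = total_range(FORECAST_2026)
print(f"Combined 2026 capex: ${low}-{high}B")
for company, base in BASELINE_2025.items():
    lo, hi = FORECAST_2026[company]
    print(f"{company}: {growth_pct(base, lo):.0f}% to {growth_pct(base, hi):.0f}%")
```

By these figures the four forecasts sum to roughly $600-620 billion, depending on where Meta lands within its range.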

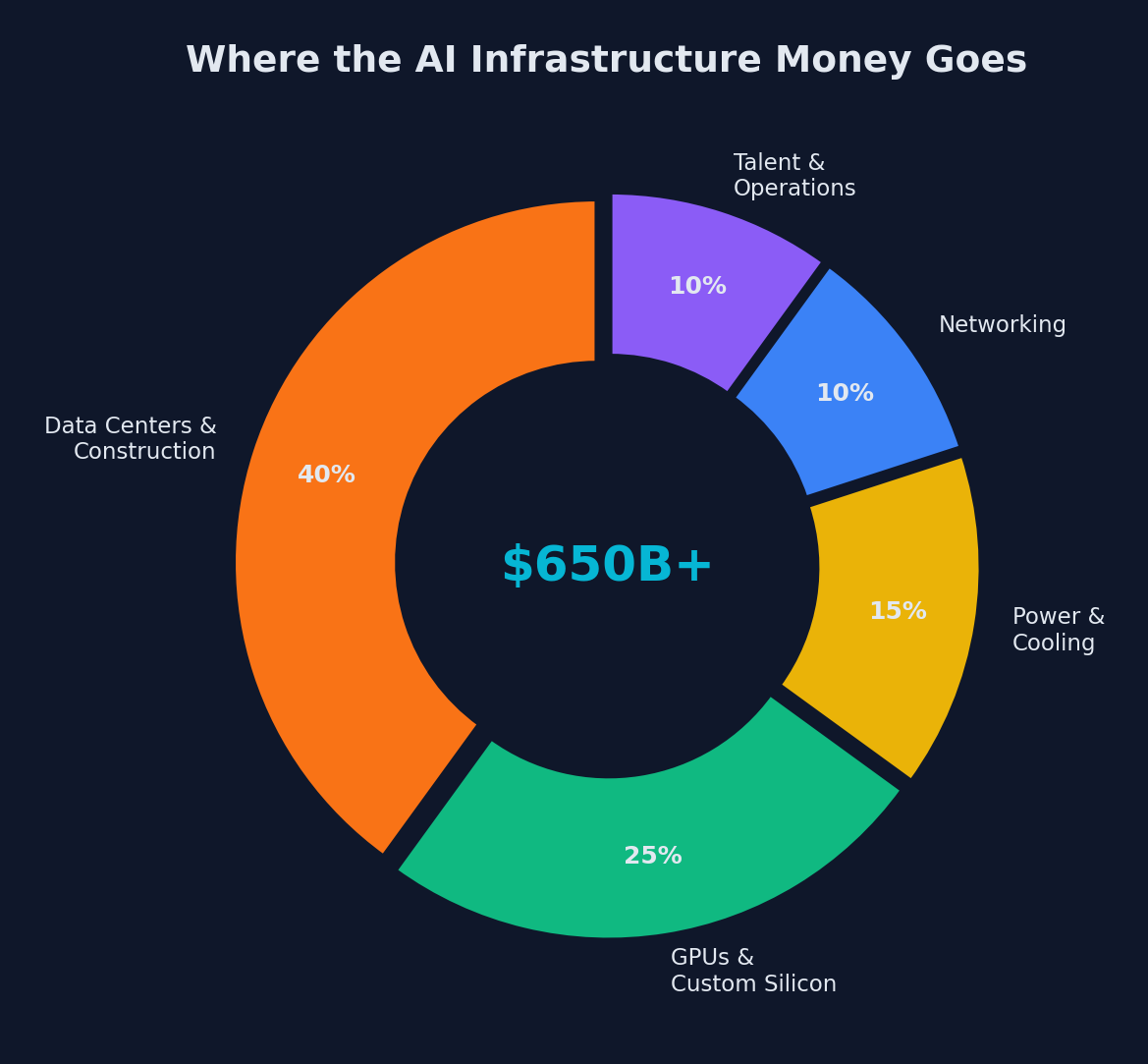

Where Is All This Money Going?

Where the $650B+ goes: data centers and construction dominate at 40%


To understand the scale, consider what $650 billion actually buys:

Data Centers

The primary expenditure is physical data center construction. A single large-scale AI data center can cost $5-10 billion. These are not typical server farms. AI-optimized data centers require:

- Specialized power: AI training clusters consume enormous amounts of electricity; a single large cluster can draw as much power as a small city. Companies are investing in nuclear power agreements, solar farms, and novel cooling technologies.

- Advanced cooling: GPU and TPU clusters generate tremendous heat. Liquid cooling, immersion cooling, and even purpose-built cooling towers are becoming standard.

- High-bandwidth networking: AI training requires massive data throughput between nodes. Custom networking hardware and topologies are necessary to keep thousands of accelerators fed with data.
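To make the scale concrete, here is a back-of-envelope sketch. It takes the 40% data-center share from the chart caption above and the $5-10 billion per-site cost, then estimates the power draw of a hypothetical cluster. The accelerator count, the roughly 1 kW per accelerator, and the power usage effectiveness (PUE) of 1.2 are illustrative assumptions, not figures reported by any of these companies.

```python
# Back-of-envelope sizing. The capex total, the 40% share, and the $5-10B
# per-site cost come from this article; the cluster parameters below are
# illustrative assumptions only.
TOTAL_CAPEX_B = 650
DATA_CENTER_SHARE = 0.40
COST_PER_SITE_B = (5, 10)


def sites_fundable(total_b, share, cost_range_b):
    """(fewest, most) large sites the data-center share could pay for."""
    budget_b = total_b * share
    cheapest, priciest = cost_range_b
    return budget_b / priciest, budget_b / cheapest


def facility_power_mw(accelerators, watts_per_accelerator, pue):
    """Facility draw in MW: IT load multiplied by power usage effectiveness."""
    it_load_w = accelerators * watts_per_accelerator
    return it_load_w * pue / 1_000_000


fewest, most = sites_fundable(TOTAL_CAPEX_B, DATA_CENTER_SHARE, COST_PER_SITE_B)
print(f"Data-center budget funds about {fewest:.0f}-{most:.0f} large sites")

# Hypothetical cluster: 100,000 accelerators at ~1 kW each (including a share
# of host and networking power) in a facility with a PUE of 1.2.
print(f"Cluster draw: about {facility_power_mw(100_000, 1_000, 1.2):.0f} MW")
```

Under these assumptions the data-center share would fund roughly 26 to 52 large sites, and the hypothetical 100,000-accelerator cluster would draw about 120 MW around the clock.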

Custom Silicon

All four companies are investing heavily in custom AI chips to reduce dependence on NVIDIA and improve the cost efficiency of their workloads:

| Company | Custom Chip | Purpose |
|---|---|---|
| Amazon | Trainium 3 | AI training and inference |
| Alphabet | TPU v6 | Training, inference, and cloud offerings |
| Meta | MTIA v3 | Inference for recommendation and ranking |
| Microsoft | Maia 200 | Azure AI workloads |

Talent

Although salaries are operating expenses rather than capital expenditures, the companies are also spending heavily to hire and retain AI researchers and engineers. Compensation packages for senior AI researchers now routinely exceed $5 million annually, and the competition for top talent has never been fiercer.

Why the Numbers Keep Growing

Several factors are driving the acceleration:

1. AI model scaling shows no signs of slowing. Each generation of frontier models requires roughly 3-5x more compute than its predecessor. Companies are competing to train the most capable models, and falling behind in compute means falling behind in capability.

2. Inference demand is exploding. While training gets the headlines, the compute required to serve AI models to users is growing even faster. Every ChatGPT query, every Copilot suggestion, every AI-generated image requires inference compute. As AI features are embedded in more products reaching more users, inference costs scale linearly with usage.

3. Enterprise adoption is accelerating. Cloud AI revenues are growing at 40-60% annually across all major providers. Enterprises are moving from AI experiments to production deployments, driving sustained demand for compute capacity.

4. Competitive dynamics prevent anyone from slowing down. No company wants to be the one that underinvested and lost the AI race. The prisoner's dilemma dynamic means everyone keeps spending, regardless of short-term returns.
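The prisoner's dilemma in point 4 can be made concrete with a toy two-firm game. The payoffs below are invented for illustration; only their ordering matters. Investing heavily is each firm's best response whatever its rival does, even though both firms would be better off if neither escalated.

```python
# Hypothetical payoffs (arbitrary units) for two rival firms that either
# "invest" heavily or "hold" back. PAYOFF[(mine, theirs)] -> my payoff.
PAYOFF = {
    ("hold", "hold"): 3,      # both save money and share the market
    ("invest", "hold"): 5,    # I pull ahead in capability
    ("hold", "invest"): 0,    # I fall behind
    ("invest", "invest"): 1,  # both pay heavily, neither gains an edge
}
MOVES = ("hold", "invest")


def best_response(their_move):
    """The move that maximizes my payoff given the rival's move."""
    return max(MOVES, key=lambda mine: PAYOFF[(mine, their_move)])


def is_dominant(move):
    """True if `move` is my best response whatever the rival does."""
    return all(best_response(theirs) == move for theirs in MOVES)


print({theirs: best_response(theirs) for theirs in MOVES})
print("invest is dominant:", is_dominant("invest"))
# Yet mutual restraint would leave both firms better off than mutual escalation.
print("hold/hold beats invest/invest:",
      PAYOFF[("hold", "hold")] > PAYOFF[("invest", "invest")])
```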

What This Means for Developers

More Powerful and Affordable AI APIs

The massive infrastructure buildout will eventually translate to better and cheaper AI services. As custom chips come online and data centers achieve scale, the cost per inference token will continue to decline. This makes AI-powered features increasingly viable for smaller companies and individual developers.
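Because inference cost scales linearly with usage (point 2 above), falling per-token prices feed straight through to the cost of a feature. A minimal sketch, assuming simple per-token pricing; the user counts, request rates, token counts, and prices are hypothetical placeholders, not any provider's actual rates.

```python
def monthly_inference_cost(users, requests_per_user_per_day, tokens_per_request,
                           usd_per_million_tokens, days=30):
    """Estimated monthly spend in USD under a linear per-token pricing model.

    Cost is proportional to every input, so doubling users (or requests, or
    tokens) doubles the bill, and halving the price halves it.
    """
    tokens = users * requests_per_user_per_day * tokens_per_request * days
    return tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical feature: 10,000 users, 5 requests a day, ~1,500 tokens each.
baseline = monthly_inference_cost(10_000, 5, 1_500, usd_per_million_tokens=2.00)
cheaper = monthly_inference_cost(10_000, 5, 1_500, usd_per_million_tokens=0.50)
print(f"${baseline:,.0f} -> ${cheaper:,.0f} per month")
```

With these placeholder inputs, a 4x drop in per-token price cuts the monthly bill from $4,500 to $1,125, while doubling the user base would double it.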

Regional Availability

The geographic expansion of AI-capable data centers means developers worldwide will have access to low-latency AI services. If you are building AI-powered applications in Southeast Asia, South America, or Africa, expect significantly improved latency and compliance options by the end of 2026.
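One way to take advantage of more regions is to pick an endpoint per deployment from measured latency, subject to data-residency rules. The sketch below assumes you have already measured round-trip latency to each candidate region; the region names, jurisdictions, and latency figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str           # hypothetical region identifier
    jurisdiction: str   # where data is stored and processed
    latency_ms: float   # measured round-trip latency from your users


def pick_region(regions, allowed_jurisdictions):
    """Lowest-latency region that satisfies the data-residency constraint."""
    eligible = [r for r in regions if r.jurisdiction in allowed_jurisdictions]
    if not eligible:
        raise ValueError("no region satisfies the residency requirement")
    return min(eligible, key=lambda r: r.latency_ms)


# Hypothetical measurements for an application serving users in Brazil.
candidates = [
    Region("us-east", "US", 180.0),
    Region("sa-east", "BR", 35.0),
    Region("eu-west", "EU", 210.0),
]
print(pick_region(candidates, {"BR"}).name)
print(pick_region(candidates, {"US", "EU"}).name)
```

Filtering on compliance before optimizing latency keeps residency a hard constraint rather than something a faster region could override.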

New Development Patterns

The abundance of cheap inference compute enables new architectural patterns:

```python
# AI-powered middleware is becoming practical at scale.
# `ai_model`, `get_access_patterns`, `cached_response`, and `process_request`
# are hypothetical application-level helpers, not a specific library's API.
async def smart_cache_middleware(request):
    """Use AI to determine the optimal caching strategy per request."""
    cache_decision = await ai_model.predict_cache_strategy(
        endpoint=request.path,
        user_segment=request.user.segment,
        historical_patterns=get_access_patterns(request.path),
    )
    if cache_decision.should_cache:
        # Serve from (or populate) the cache using the model-chosen TTL
        return cached_response(request, ttl=cache_decision.ttl)
    return await process_request(request)
```

Patterns like this, where AI is used for real-time operational decisions, become economically viable as inference costs drop.
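The helpers in the middleware above are placeholders. Below is a self-contained, runnable version of the same pattern under stated assumptions: a rule-based `RuleBasedPredictor` stands in for the hypothetical `ai_model` (a real system would call an inference endpoint at that point), `TTLCache` is a minimal in-memory cache, and a request is reduced to a path and a user segment. It checks for an existing entry before calling the handler, so the predictor's TTL decision actually controls reuse.

```python
import asyncio
import time
from dataclasses import dataclass


@dataclass
class CacheDecision:
    should_cache: bool
    ttl: float  # seconds


class RuleBasedPredictor:
    """Stand-in for the hypothetical `ai_model`; a real system would call a model."""

    async def predict_cache_strategy(self, endpoint, user_segment, historical_patterns):
        hits = historical_patterns.get("hits_per_minute", 0)
        # Cache busy endpoints, and keep the hottest ones longer.
        return CacheDecision(should_cache=hits > 10, ttl=60 if hits > 100 else 10)


class TTLCache:
    """Minimal in-memory cache whose entries expire after a per-entry TTL."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and entry[1] > time.monotonic():
            return entry[0]
        return None

    def set(self, key, value, ttl):
        self._store[key] = (value, time.monotonic() + ttl)


async def smart_cache_middleware(path, segment, patterns, handler, model, cache):
    """Ask the predictor whether to cache, then serve from or fill the cache."""
    decision = await model.predict_cache_strategy(
        endpoint=path, user_segment=segment, historical_patterns=patterns
    )
    key = (path, segment)
    if decision.should_cache:
        cached = cache.get(key)
        if cached is not None:
            return cached
    response = await handler(path)
    if decision.should_cache:
        cache.set(key, response, decision.ttl)
    return response


async def main():
    calls = []

    async def handler(path):
        calls.append(path)
        return f"rendered {path}"

    model, cache = RuleBasedPredictor(), TTLCache()
    for _ in range(3):
        await smart_cache_middleware("/home", "free", {"hits_per_minute": 500},
                                     handler, model, cache)
    print(f"handler ran {len(calls)} time(s) for 3 requests")


if __name__ == "__main__":
    asyncio.run(main())
```

Note that the predictor runs on every request, so the pattern only pays off when that call is much cheaper than the work it saves, which is exactly the economics the paragraph above describes.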

Open-Source Model Ecosystem

Meta's continued investment in open-source Llama models, backed by over $100 billion in infrastructure, means developers have access to increasingly powerful models they can self-host. This is particularly important for applications with data privacy requirements or latency-sensitive workloads.

The Skeptic's View

Not everyone is convinced this spending is sustainable. Critics point to several concerns:

Revenue justification: The combined AI revenue of these four companies does not yet come close to justifying $650 billion in annual spending. While cloud revenues are growing rapidly, the return on investment remains uncertain for many AI products.

Overcapacity risk: If demand does not materialize as expected, the industry could face a glut of AI compute capacity. This has happened before in technology, with the fiber optic bubble of the early 2000s being the most cited parallel.

Energy constraints: The power requirements of AI data centers are straining electrical grids in some regions. Securing reliable, affordable power is becoming a bottleneck that money alone cannot solve quickly.

Diminishing returns from scale: Some researchers argue that simply scaling models larger is reaching a point of diminishing returns, and future breakthroughs will come from algorithmic innovations rather than brute-force compute. If true, the current level of spending may be excessive.
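The diminishing-returns argument is easiest to see under a power-law model of loss versus training compute, a functional form commonly used in scaling-law studies. The constants below are made up for this sketch; only the shape of the curve matters. Each tenfold increase in compute (and roughly in cost) buys a smaller absolute improvement than the one before.

```python
# Illustrative power law: loss(C) = A * C**(-ALPHA). Both constants are
# invented for this sketch; only the shape of the curve matters.
A, ALPHA = 10.0, 0.05


def loss(compute):
    """Modeled loss as a function of training compute (arbitrary units)."""
    return A * compute ** (-ALPHA)


# Multiply compute by 10 at each step and record the absolute loss reduction.
budgets = [10.0 ** k for k in range(20, 26)]
gains = [loss(c) - loss(10 * c) for c in budgets]

for c, g in zip(budgets, gains):
    print(f"{c:.0e} -> {10 * c:.0e}: loss improves by {g:.4f}")

# Every extra 10x of compute buys less than the previous 10x did.
print(all(earlier > later for earlier, later in zip(gains, gains[1:])))
```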

Looking Ahead

Whether or not every dollar of this $650 billion investment generates returns, the infrastructure being built will fundamentally reshape the technology landscape. The data centers, power connections, and network routes will serve the industry for decades, even as the chips inside them are replaced every few years.

For developers, the practical advice is straightforward:

- Build with AI APIs now. The infrastructure investment ensures these services will be available, reliable, and increasingly affordable.

- Learn to optimize AI costs. As AI becomes embedded in more applications, understanding how to minimize inference costs while maintaining quality will be a valuable skill.

- Stay cloud-flexible. With all four major providers investing heavily, competition will keep pricing competitive. Avoid deep lock-in to any single provider.

- Watch the open-source space. Meta's commitment to open-source models means self-hosted AI will remain viable and improve rapidly.
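The cost and flexibility advice above can be combined in one small pattern: code against a narrow interface of your own, and put a cache in front of it so identical prompts are only paid for once. The `CompletionProvider` protocol and the `EchoProvider` stand-in are hypothetical; a real adapter would wrap a vendor SDK. Caching by exact prompt is only appropriate when responses are deterministic enough to reuse, for example with temperature set to zero.

```python
from functools import lru_cache
from typing import Protocol


class CompletionProvider(Protocol):
    """Hypothetical minimal interface; write one small adapter per vendor SDK."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Local stand-in that counts billable calls instead of calling a vendor."""

    def __init__(self) -> None:
        self.calls = 0

    def complete(self, prompt: str) -> str:
        self.calls += 1
        return prompt.upper()


def make_cached_client(provider: CompletionProvider, maxsize: int = 1024):
    """Wrap any provider so repeated identical prompts are served from memory."""

    @lru_cache(maxsize=maxsize)
    def complete(prompt: str) -> str:
        return provider.complete(prompt)

    return complete


provider = EchoProvider()
complete = make_cached_client(provider)
for prompt in ["hello", "hello", "world"]:
    complete(prompt)
print(f"{provider.calls} billable calls for 3 prompts")
```

Because application code only depends on `complete(prompt)`, switching vendors means writing one new adapter rather than touching every call site.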

The $650 billion question is not whether AI infrastructure will be useful but whether this specific level of spending will generate commensurate returns. History suggests that infrastructure booms tend to overshoot in the short term but undershoot in the long term. The fiber optic cables laid during the dot-com bubble eventually powered the internet economy. The AI infrastructure being built today will likely power applications we cannot yet imagine.
