Apple and Google have announced a multi-year partnership to power the next generation of Siri with Google's Gemini AI. The upgraded Siri will debut in iOS 26.4, with the beta available in late February 2026 and a full public release in March or early April.

This is the most significant change to Siri since its 2011 launch — and one of the most surprising partnerships in tech history.

What Is Changing

The current Siri is primarily a command-based assistant. It handles structured requests well — "Set a timer for 10 minutes," "Call Mom," "What is the weather?" — but struggles with conversational interactions, multi-turn dialogues, and complex reasoning.

The Gemini-powered Siri will be fundamentally different:

| Capability | Current Siri | Gemini Siri |
|---|---|---|
| Conversation memory | Single turn | Multi-turn with context |
| Complex queries | Limited | Full reasoning |
| Follow-up questions | Poor | Natural |
| Creative tasks | Minimal | Full support |
| Code assistance | None | Available |
| Document analysis | None | Available |

The new Siri can maintain context across a conversation, handle ambiguous requests, and perform complex reasoning tasks that current Siri simply cannot do.

How the Partnership Works

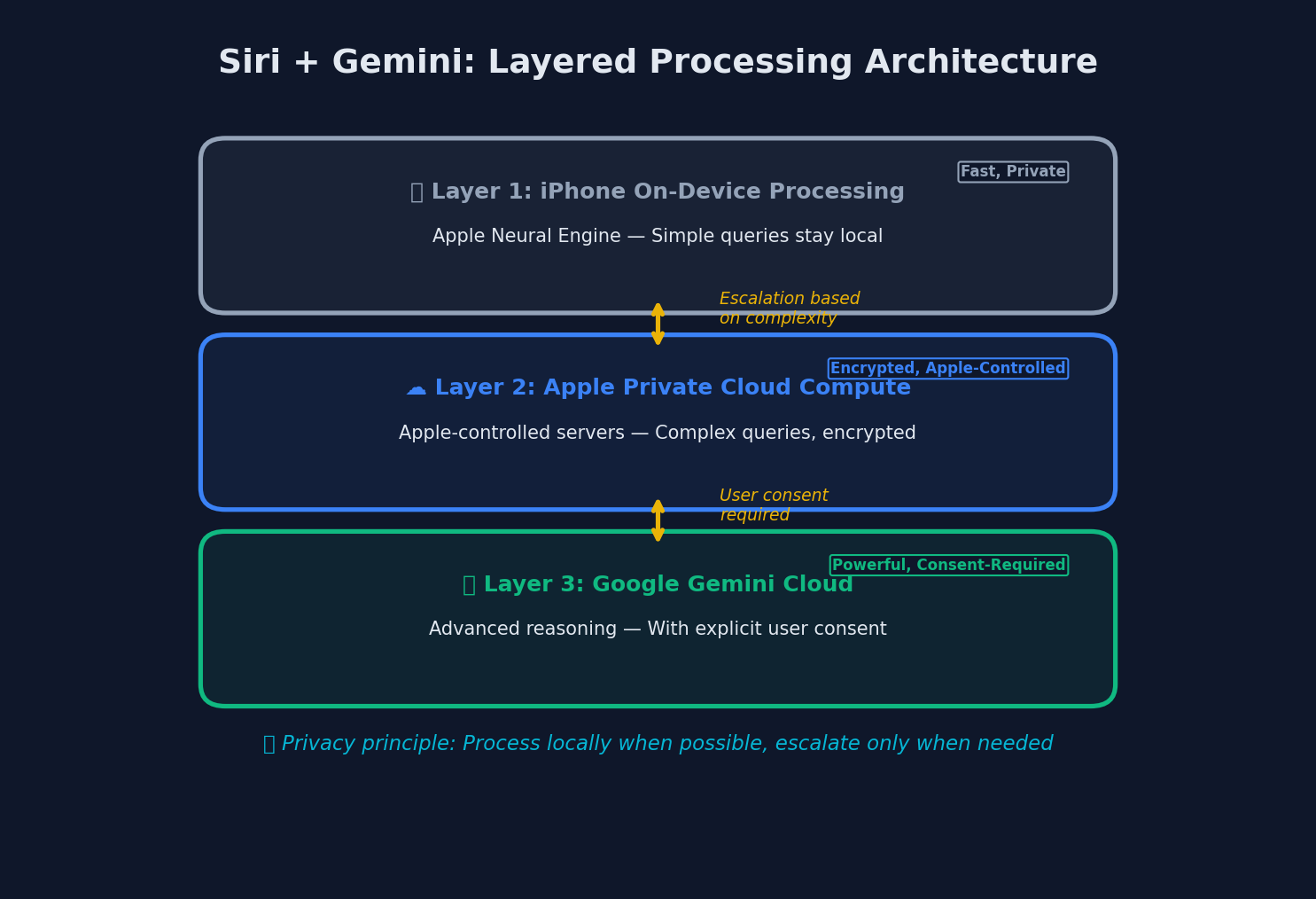

Three-layer processing: queries escalate from device to Apple cloud to Gemini based on complexity

The technical architecture splits responsibilities between Apple and Google:

Gemini-Powered Siri Architecture
├── On-Device Processing (Apple)
│   ├── Wake word detection ("Hey Siri")
│   ├── Basic commands (timers, calls, device controls)
│   ├── Privacy filtering and PII redaction
│   └── Audio processing and transcription
│
├── Cloud Processing (Google Gemini)
│   ├── Complex reasoning and analysis
│   ├── Multi-turn conversation management
│   ├── Knowledge retrieval and synthesis
│   └── Creative and generative tasks
│
└── Integration Layer (Apple)
    ├── App Intents framework
    ├── System action execution
    ├── Response formatting and voice synthesis
    └── Privacy controls and user preferences

Simple commands continue to process on-device for speed and privacy. Complex queries route to Google's Gemini infrastructure, with Apple controlling what data is sent and how responses are integrated.
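The split between on-device and cloud handling can be pictured with a small routing sketch. This is only an illustration under stated assumptions: the prefix list, the `route_query` function, and the `gemini_enabled` flag are hypothetical names, and the announcement does not describe how Apple actually classifies queries.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on-device"
    GEMINI = "gemini"


# Hypothetical prefix list standing in for whatever classifier is really used.
BASIC_COMMAND_PREFIXES = ("set a timer", "call ", "turn on", "turn off", "open ")


@dataclass
class RoutingDecision:
    route: Route
    reason: str


def route_query(text: str, gemini_enabled: bool = True) -> RoutingDecision:
    """Pick a processing tier for a transcribed query."""
    normalized = text.strip().lower()
    if normalized.startswith(BASIC_COMMAND_PREFIXES):
        # Structured commands stay local for speed and privacy.
        return RoutingDecision(Route.ON_DEVICE, "basic command")
    if not gemini_enabled:
        # The user opted out, so nothing leaves the device.
        return RoutingDecision(Route.ON_DEVICE, "Gemini disabled by user")
    return RoutingDecision(Route.GEMINI, "needs complex reasoning")


print(route_query("Set a timer for 10 minutes").route.value)
print(route_query("Help me write a toast for my sister's wedding").route.value)
```

A real system would presumably use a learned classifier rather than string prefixes; the point of the sketch is only that the routing decision is made before any data is sent off the device.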

The Privacy Question

Apple has built its brand on privacy. Partnering with Google — a company that makes most of its revenue from advertising based on user data — raises obvious questions.

Apple addressed this directly in its announcement:

1. Data minimization: Only the minimum necessary context is sent to Gemini. Personal identifiers are stripped before transmission.

2. No advertising use: Google contractually cannot use Siri query data for advertising targeting or model training.

3. Opt-out available: Users can disable Gemini integration entirely and use on-device Siri only (with reduced capabilities).

4. Transparency: iOS will indicate when a query is being processed by Gemini versus on-device.
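To make the data-minimization point concrete, here is a rough sketch of stripping identifiers from a query before it is transmitted. The patterns and the `redact` function are assumptions made for illustration; Apple has not published how its PII redaction works, and real detection would cover far more than emails and phone numbers.

```python
import re

# Illustrative patterns only; real PII detection is much broader than this.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email jane.doe@example.com or call +1 415-555-0100 tomorrow"))
# Email [EMAIL] or call [PHONE] tomorrow
```

The placeholder keeps the sentence structure intact, so a cloud model could still reason about the request without seeing the underlying value.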

Whether users trust these assurances will determine adoption. Apple is betting that the capability improvements outweigh privacy concerns for most users.

Why Apple Chose Google

Apple has its own AI research division and has been developing on-device models for years. Why partner with Google instead of building in-house?

1. Time to market. Apple's internal AI efforts are years behind the frontier. Siri has been losing ground to ChatGPT and Claude, and Apple needed a solution now, not in 2028.

2. Infrastructure costs. Running frontier AI models requires massive GPU clusters. Google already has this infrastructure; building it from scratch would cost Apple tens of billions.

3. Model quality. Gemini 2.5 is competitive with GPT-5 and Claude Opus. Apple's internal models are not at this level.

4. Existing relationship. Apple and Google already have a $20+ billion annual deal for Safari default search. This partnership extends an existing commercial relationship.

The partnership is a tacit admission that Apple fell behind in the AI race — and a pragmatic decision to buy capability rather than build it.

What Users Will Experience

In iOS 26.4, Siri interactions will feel dramatically different:

Conversational flow:

User: "What was that movie we were talking about last week?"

Siri: "You asked me about 'Dune: Part Three' on Tuesday. You wanted to know the release date: it's March 15th. Would you like me to add it to your calendar or find showtimes?"

Complex reasoning:

User: "I have a meeting in Tokyo at 9am their time on Thursday. What time should I wake up to be ready, accounting for jet lag adjustment?"

Siri: "Your Thursday meeting is 9am JST, which is 5pm Wednesday your time in San Francisco. For jet lag, I'd recommend arriving at least 2 days early. If you fly out Monday evening, you should wake up at 6am Tokyo time on Wednesday to start adjusting. Want me to look at flight options?"

Creative assistance:

User: "Help me write a toast for my sister's wedding"

Siri: "I'd be happy to help. Tell me a bit about your sister: what's her personality like? Any funny stories from childhood you might want to reference? And what tone are you going for: heartfelt, humorous, or a mix?"

This is not Siri as we have known it. This is a conversational AI assistant that happens to be integrated into iOS.
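The time conversion in the Tokyo exchange above is easy to check by hand or in a few lines of code. The sketch below uses fixed UTC offsets and an assumed date (Thursday 2 April 2026, chosen only for illustration). It shows that "5pm Wednesday" holds while San Francisco is on daylight time (UTC-7); during standard time (UTC-8) the same meeting would fall at 4pm.

```python
from datetime import datetime, timedelta, timezone

JST = timezone(timedelta(hours=9), "JST")    # Japan does not observe daylight saving
PDT = timezone(timedelta(hours=-7), "PDT")   # San Francisco, daylight time
PST = timezone(timedelta(hours=-8), "PST")   # San Francisco, standard time

# Assumed date for illustration: Thursday 2 April 2026, 9am in Tokyo.
meeting = datetime(2026, 4, 2, 9, 0, tzinfo=JST)

in_pdt = meeting.astimezone(PDT)
in_pst = meeting.astimezone(PST)  # hypothetical: what a winter date would give

print(in_pdt.strftime("%A %H:%M %Z"))  # Wednesday 17:00 PDT
print(in_pst.strftime("%A %H:%M %Z"))  # Wednesday 16:00 PST
```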

Developer Impact

For iOS developers, the Gemini-powered Siri creates new opportunities:

App Intents expansion: The App Intents framework now supports richer interactions. Apps can expose complex actions that Siri can chain together intelligently.

Siri Shortcuts upgrade: Shortcuts can now include conversational steps where Siri gathers information through dialogue before executing.

SiriKit deprecation: Apple is moving developers from the older SiriKit framework to App Intents. SiriKit will be deprecated in iOS 27.

New capabilities:

- Document and image analysis within apps

- Multi-step workflows with user confirmation

- Context-aware suggestions based on app state

- Natural language app control
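The idea of chained actions with a confirmation step can be sketched in a language-neutral way. The example below is written in Python rather than Swift and does not use the App Intents API; `Step`, `run_workflow`, and the flight actions are hypothetical names meant only to show the control flow: each step reads a shared context, and a step marked as needing confirmation runs only if the user agrees.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    action: Callable[[dict], dict]   # reads the shared context, returns updates
    needs_confirmation: bool = False


def run_workflow(steps: list[Step], confirm: Callable[[str], bool]) -> dict:
    """Run steps in order; stop at the first step the user declines."""
    context: dict = {"completed": []}
    for step in steps:
        if step.needs_confirmation and not confirm(step.name):
            context["stopped_at"] = step.name
            break
        context.update(step.action(context))
        context["completed"].append(step.name)
    return context


# Hypothetical two-step chain: look something up, then act on it.
steps = [
    Step("find_flight", lambda ctx: {"flight": "SFO-HND"}),
    Step("book_flight", lambda ctx: {"booked": ctx["flight"]}, needs_confirmation=True),
]

approved = run_workflow(steps, confirm=lambda name: True)
declined = run_workflow(steps, confirm=lambda name: False)
print(approved["completed"])   # ['find_flight', 'book_flight']
print(declined["stopped_at"])  # book_flight
```

Keeping confirmation as a property of the step, rather than of the assistant, means an app could decide which of its own actions are sensitive enough to require it.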

The Competitive Implications

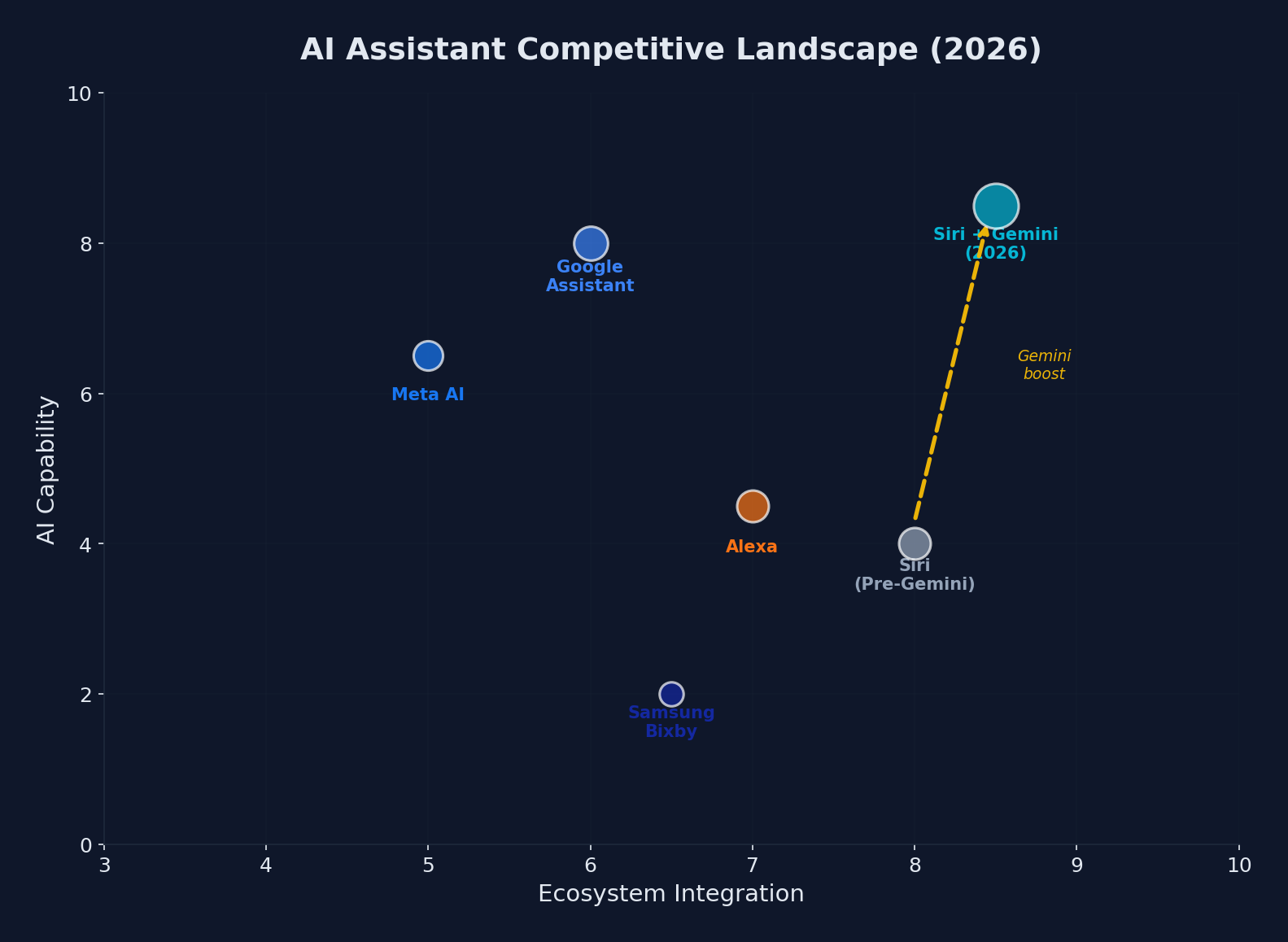

The Siri + Gemini partnership vaults Apple's assistant to the top of the capability-integration chart

This partnership reshapes the AI assistant landscape:

| Assistant | AI Backend | Platform | Positioning |
|---|---|---|---|
| Siri | Gemini | iOS/macOS | Apple ecosystem integration |
| ChatGPT | GPT-5.2 | Cross-platform | General-purpose leader |
| Claude | Claude models | Cross-platform | Enterprise and developers |
| Gemini | Gemini | Android/Web | Google ecosystem |
| Copilot | GPT + custom | Windows | Microsoft ecosystem |

Apple choosing Gemini over GPT is a significant win for Google. It validates Gemini's quality and gives Google AI distribution on over 1.5 billion active Apple devices.

For OpenAI, it is a missed opportunity. They reportedly pitched Apple on a partnership but could not match Google's infrastructure capabilities and existing relationship.

The Broader Pattern

The Apple-Google deal reflects a broader trend: platform companies are partnering with AI labs rather than building frontier models themselves.

- Apple → Google Gemini

- Samsung → Google Gemini

- Amazon → Anthropic Claude (Alexa integration in development)

- Meta → Building in-house (Llama)

Building frontier AI requires specialized talent, massive compute infrastructure, and years of research. Most companies are concluding it is faster and cheaper to partner than to build.

Release Timeline

| Milestone | Date |
|---|---|
| iOS 26.4 Developer Beta | Late February 2026 |
| iOS 26.4 Public Beta | Early March 2026 |
| iOS 26.4 General Release | March/April 2026 |
| macOS integration | Following iOS release |
| Full Siri parity | iOS 27 (Fall 2026) |

The February beta will include core conversational capabilities. Additional features — including deeper app integration and on-device improvements — will roll out throughout 2026.

What This Means

Apple partnering with Google on Siri is an acknowledgment of how dramatically the AI landscape has shifted. Two years ago, Apple was confident it could build competitive AI in-house. Today, it is licensing capability from a competitor.

For users, the result is a dramatically more capable Siri. For the industry, it signals that the AI platform wars are far from settled — and that unexpected alliances are the new normal.
